1. User behavior data collection;

(1). Set up the big data cluster: hadoop, zookeeper, kafka, hbase;

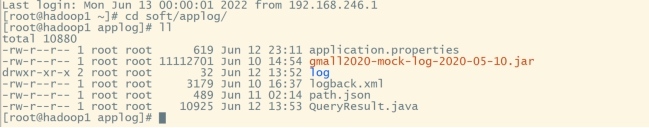

(2). Upload the mock data;

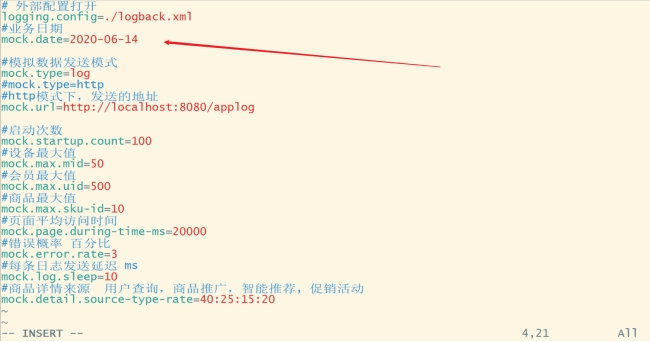

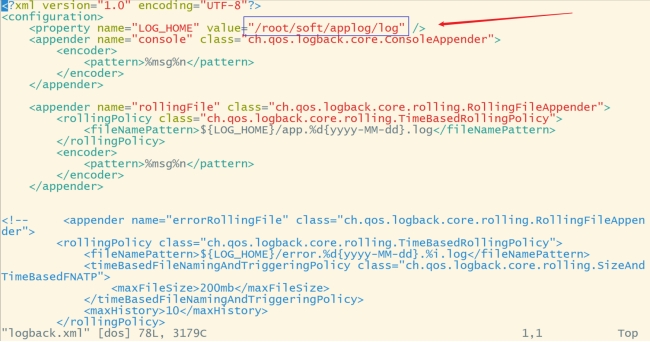

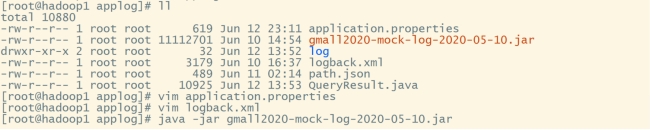

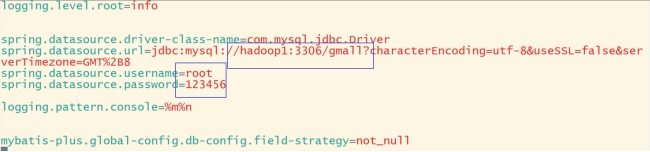

(3). Modify the configuration files application.properties and logback.xml;

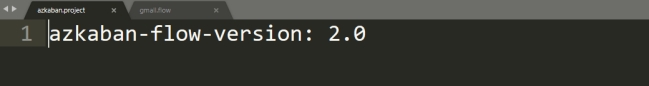

# (in data warehouse 4.0 this is a yml file)

vim application.properties

vim logback.xml

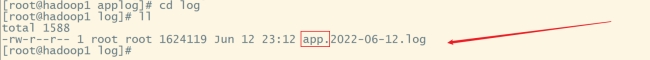

(4). Generate the logs and inspect them;

java -jar gmall2020-mock-log-2020-05-10.jar

# Enter the log directory

cd log

# List the generated files

ll

(4).分发applog;

# 进入applog所在目录

cd /root/soft

# 分发

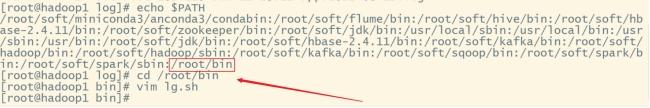

xsync applog(5).编写集群日志生成脚本;

echo $PATH

vim lg.sh

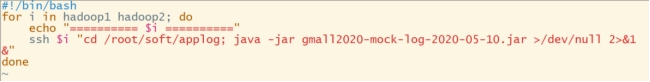

#!/bin/bash

for i in hadoop1 hadoop2; do

echo "========== $i =========="

ssh $i "cd /root/soft/applog; java -jar gmall2020-mock-log-2020-05-10.jar >/dev/null 2>&1 &"

done

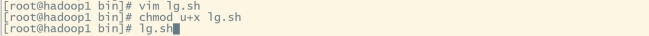

Make the script executable, then run it: chmod u+x lg.sh, followed by lg.sh

Check on hadoop2 that log files were generated: cd /root/soft/applog/log
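Listing the directory shows that files exist, but not whether they contain anything. The following is a minimal sketch of a check that prints the line count of every file in a log directory. `count_log_lines` is a hypothetical helper name, not part of the original scripts.

```shell
#!/bin/bash
# Hypothetical helper: print "<line count> <file name>" for each regular file
# in the given directory, e.g. /root/soft/applog/log.
count_log_lines() {
    local dir="$1"
    local f
    if [ ! -d "$dir" ]; then
        echo "no such directory: $dir" >&2
        return 1
    fi
    for f in "$dir"/*; do
        # Skip the unexpanded pattern when the directory is empty.
        [ -f "$f" ] || continue
        printf '%s %s\n' "$(wc -l < "$f" | tr -d ' ')" "$(basename "$f")"
    done
}
```

After defining the function on the node, `count_log_lines /root/soft/applog/log` should list a non-zero line count for each generated log file.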

(6).数据采集模块;

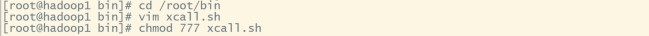

编写集群所有进程查看脚本并赋权限:

cd /root/bin

vim xcall.sh

chmod 777 xcall.sh

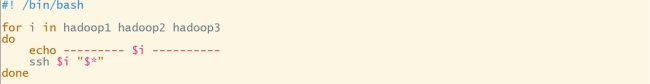

Script contents:

#! /bin/bash

for i in hadoop1 hadoop2 hadoop3

do

echo --------- $i ----------

ssh $i "$*"

done

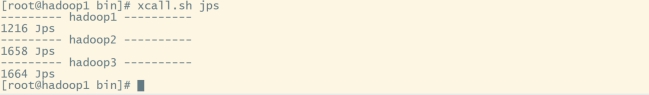

Run the script: xcall.sh jps

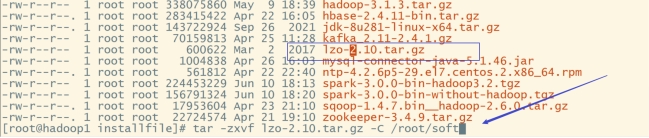

(7). LZO compression configuration;

#Install wget:

yum -y install wget

#Install gcc-c++, lzo-devel, zlib-devel, autoconf, automake and libtool:

yum -y install gcc-c++ lzo-devel zlib-devel autoconf automake libtool

#Download LZO (it is compiled and installed in the steps that follow):

wget http://www.oberhumer.com/opensource/lzo/download/lzo-2.10.tar.gz

#Extract:

tar -zxvf lzo-2.10.tar.gz -C /root/soft

Change to the lzo directory and run the following commands: cd /root/soft/lzo-2.10/

./configure -prefix=/root/soft/hadoop/lzo/

make

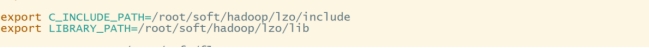

make install

Declare two environment variables in ~/.bashrc and apply them: vim ~/.bashrc, then source ~/.bashrc

export C_INCLUDE_PATH=/root/soft/hadoop/lzo/include

export LIBRARY_PATH=/root/soft/hadoop/lzo/lib

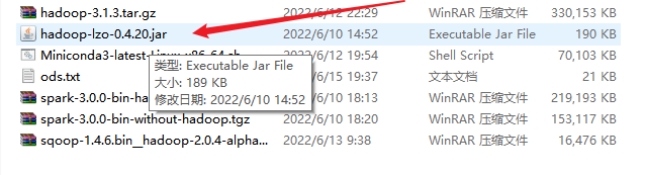

上传hadoop-lzo-0.4.20.jar并放入hadoop下的common:

mv hadoop-lzo-0.4.20.jar /root/soft/hadoop/share/hadoop/common

#切换到common目录:

cd /root/soft/hadoop/share/hadoop/common

#分发hadoop-lzo-0.4.20.jar:

xsync hadoop-lzo-0.4.20.jar

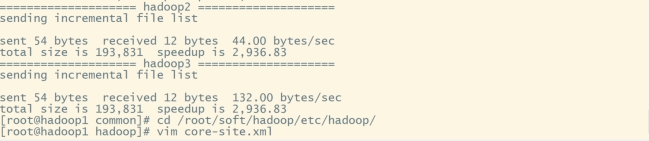

#切换到core-site.xml增加支持LZO配置:

cd /root/soft/hadoop/etc/hadoop/编辑core-site.xml:vim core-site.xml

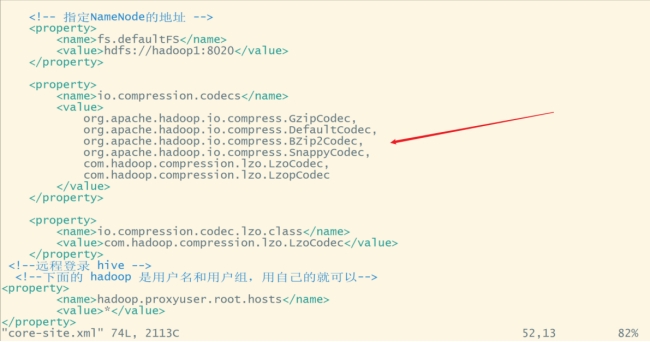

<property>

<name>io.compression.codecs</name>

<value>

org.apache.hadoop.io.compress.GzipCodec,

org.apache.hadoop.io.compress.DefaultCodec,

org.apache.hadoop.io.compress.BZip2Codec,

org.apache.hadoop.io.compress.SnappyCodec,

com.hadoop.compression.lzo.LzoCodec,

com.hadoop.compression.lzo.LzopCodec

</value>

</property>

<property>

<name>io.compression.codec.lzo.class</name>

<value>com.hadoop.compression.lzo.LzoCodec</value>

</property>

#Distribute core-site.xml:

xsync core-site.xml

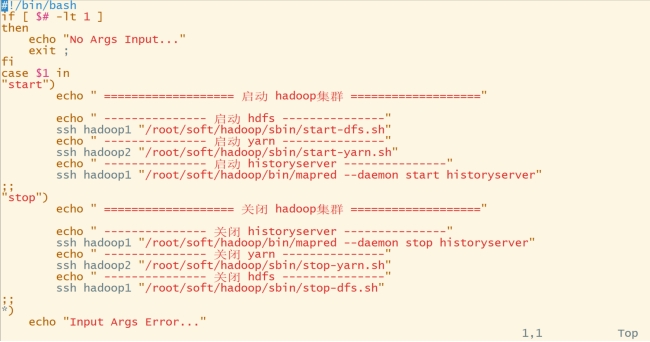

#Write the script that starts and stops the whole hadoop cluster:

cd /root/bin

vim hdp.sh

#!/bin/bash

if [ $# -lt 1 ]

then

echo "No Args Input..."

exit 1

fi

case $1 in

"start")

echo " =================== 启动 hadoop集群 ==================="

echo " --------------- 启动 hdfs ---------------"

ssh hadoop1 "/root/soft/hadoop/sbin/start-dfs.sh"

echo " --------------- 启动 yarn ---------------"

ssh hadoop2 "/root/soft/hadoop/sbin/start-yarn.sh"

echo " --------------- 启动 historyserver ---------------"

ssh hadoop1 "/root/soft/hadoop/bin/mapred --daemon start historyserver"

;;

"stop")

echo " =================== 关闭 hadoop集群 ==================="

echo " --------------- 关闭 historyserver ---------------"

ssh hadoop1 "/root/soft/hadoop/bin/mapred --daemon stop historyserver"

echo " --------------- 关闭 yarn ---------------"

ssh hadoop2 "/root/soft/hadoop/sbin/stop-yarn.sh"

echo " --------------- 关闭 hdfs ---------------"

ssh hadoop1 "/root/soft/hadoop/sbin/stop-dfs.sh"

;;

*)

echo "Input Args Error..."

;;

esac

#赋权限:

chmod 777 hdp.sh

#启动集群:

hdp.sh start

#查看进程是否缺少:

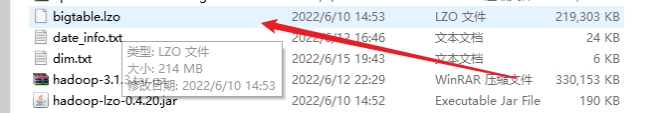

xcall.sh jps(8).LZO创建索引;

上传bigtable.lzo到hdfs:

hdfs dfs -put bigtable.lzo /input

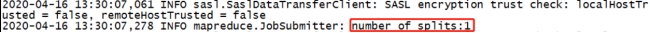

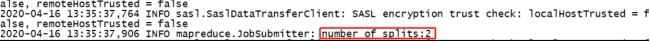

Run the wordcount program (if it fails, the Hadoop configuration is wrong and has to be redone):

hadoop jar /root/soft/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar wordcount -Dmapreduce.job.inputformat.class=com.hadoop.mapreduce.LzoTextInputFormat /input /output1

After it finishes, check whether output1 contains files (no files means the job failed):

hdfs dfs -ls /output1

Build an index for bigtable.lzo:

hadoop jar /root/soft/hadoop/share/hadoop/common/hadoop-lzo-0.4.20.jar com.hadoop.compression.lzo.DistributedLzoIndexer /input/bigtable.lzo

Run wordcount again:

hadoop jar /root/soft/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar wordcount -Dmapreduce.job.inputformat.class=com.hadoop.mapreduce.LzoTextInputFormat /input /output2

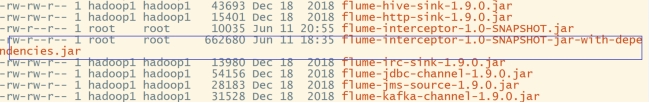

(9).日志采集Flume安装配置;

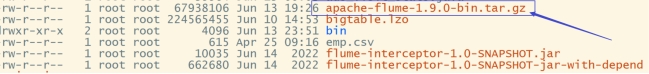

上传flume安装包并解压:

tar -zxvf apache-flume-1.9.0-bin.tar.gz -C /root/soft

切换到soft目录并重命名flume:cd /root/soft

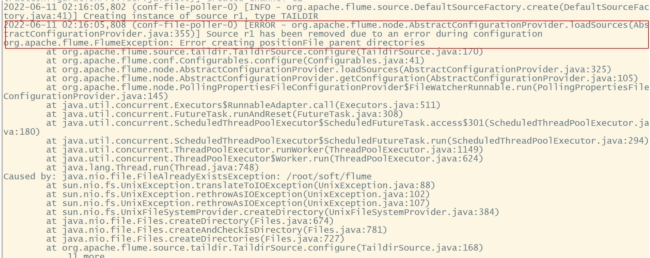

mv apache-flume-1.9.0-bin flume最好不要使用软连接,后面使用flume可能会报如下图错误:

删除flume的lib下的guava-11.0.2.jar:

cd flume/lib

rm guava-11.0.2.jar切换到conf目录:cd ..

修改flume-env.sh.template名为flume-env.sh并配置:

mv flume-env.sh.template flume-env.sh

vim flume-env.sh

export JAVA_HOME=/root/soft/jdk(10).配置采集日志的conf文件;

#切换到flume下的conf:

cd /root/soft/flume/conf

#创建file-flume-kafka.conf:

vim file-flume-kafka.conf#为各组件命名

a1.sources = r1

a1.channels = c1

#Describe the source

a1.sources.r1.type = TAILDIR

a1.sources.r1.filegroups = f1

a1.sources.r1.filegroups.f1 = /root/soft/applog/log/app.*

a1.sources.r1.positionFile = /root/soft/flume/taildir_position.json

a1.sources.r1.interceptors = i1

a1.sources.r1.interceptors.i1.type = com.atguigu.flume.interceptor.ETLInterceptor$Builder

#Describe the channel

a1.channels.c1.type = org.apache.flume.channel.kafka.KafkaChannel

a1.channels.c1.kafka.bootstrap.servers = hadoop1:9092,hadoop2:9092

a1.channels.c1.kafka.topic = topic_log

a1.channels.c1.parseAsFlumeEvent = false

#Bind the source to the channel (no sink is declared in this agent; the Kafka channel writes straight to the topic)

a1.sources.r1.channels = c1

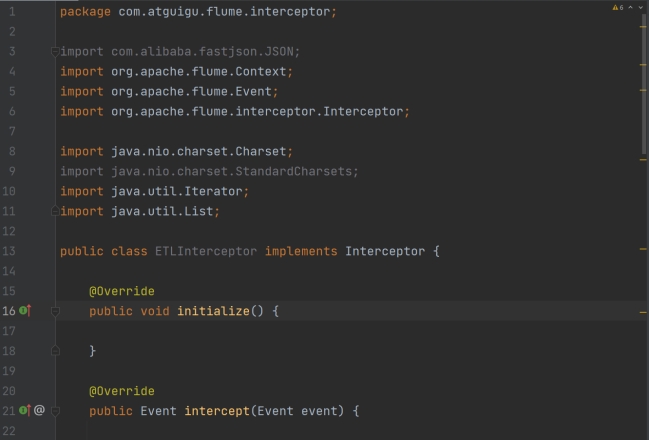

编写flume拦截器(展示部分代码):

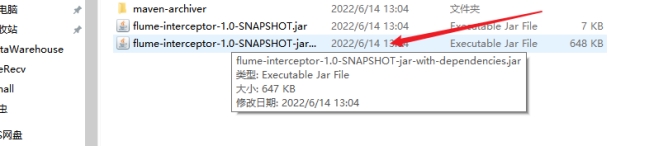

打jar包:

上传至flume的lib目录:

#切换到soft目录:

cd /root/soft

#分发flume:

xsync flume

#启动zookeeper和kafka:

zk.sh

kf.sh

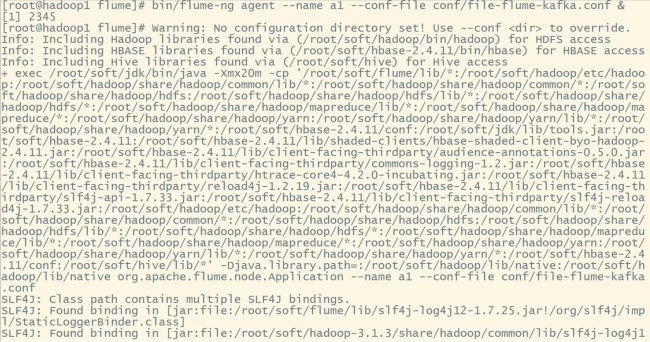

#在hadoop1和hadoop2上启动flume:

#切换到flume目录:

cd /root/soft/flume

# 启动

bin/flume-ng agent --name a1 --conf-file conf/file-flume-kafka.conf &

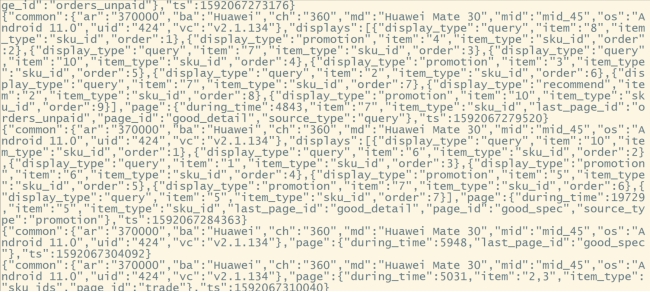

On hadoop3, check whether data has reached kafka. From the kafka directory:

bin/kafka-console-consumer.sh --bootstrap-server hadoop1:9092 --topic topic_log

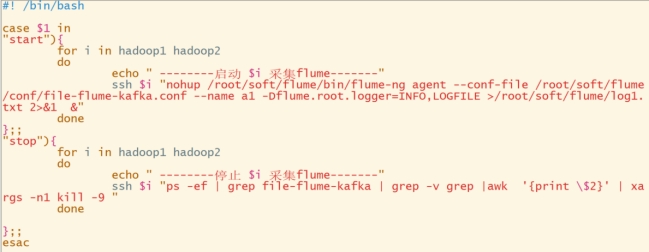

Write the start/stop script for the log collection flume: cd /root/bin

vim f1.sh

#! /bin/bash

case $1 in

"start"){

for i in hadoop1 hadoop2

do

echo " --------启动 $i 采集flume-------"

ssh $i "nohup /root/soft/flume/bin/flume-ng agent --conf-file /root/soft/flume/conf/file-flume-kafka.conf --name a1 -Dflume.root.logger=INFO,LOGFILE >/root/soft/flume/log1.txt 2>&1 &"

done

};;

"stop"){

for i in hadoop1 hadoop2

do

echo " --------停止 $i 采集flume-------"

ssh $i "ps -ef | grep file-flume-kafka | grep -v grep |awk '{print \$2}' | xargs -n1 kill -9 "

done

};;

esac

#Grant permissions:

chmod 777 f1.sh
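The stop branch of f1.sh pipes PIDs into `kill -9`. SIGKILL cannot be caught, so the agent gets no chance to shut down cleanly, and `xargs` without `-r` still runs `kill` when no process matched. The following is a minimal sketch of a gentler variant, assuming GNU xargs; `pids_for` and `stop_agent` are hypothetical names, not part of the original script.

```shell
#!/bin/bash
# Hypothetical helper: read `ps -ef` style output on stdin and print the PID
# (second column) of every line mentioning the marker, skipping grep itself.
pids_for() {
    local marker="$1"
    grep -- "$marker" | grep -v grep | awk '{print $2}'
}

# Hypothetical wrapper: send the default SIGTERM to each matching process.
# `xargs -r` (GNU xargs) does not run kill at all when no PID was found.
stop_agent() {
    local marker="$1"
    ps -ef | pids_for "$marker" | xargs -r -n1 kill
}
```

On a collection node, `stop_agent file-flume-kafka` would then replace the `ps -ef | grep ... | xargs -n1 kill -9` pipeline.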

#在hadoop3上flume的conf目录创建

vim kafka-flume-hdfs.conf

# 进入conf文件夹

cd /root/soft/flume/conf

#创建kafka-flume-hdfs.conf

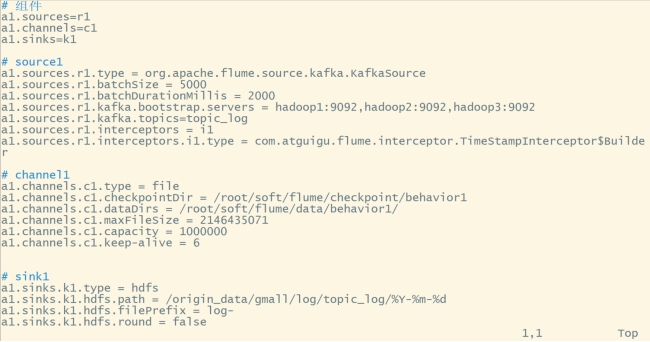

vim kafka-flume-hdfs.conf# 组件

a1.sources=r1

a1.channels=c1

a1.sinks=k1

# source1

a1.sources.r1.type = org.apache.flume.source.kafka.KafkaSource

a1.sources.r1.batchSize = 5000

a1.sources.r1.batchDurationMillis = 2000

a1.sources.r1.kafka.bootstrap.servers = hadoop1:9092,hadoop2:9092,hadoop3:9092

a1.sources.r1.kafka.topics=topic_log

a1.sources.r1.interceptors = i1

a1.sources.r1.interceptors.i1.type = com.atguigu.flume.interceptor.TimeStampInterceptor$Builder

# channel1

a1.channels.c1.type = file

a1.channels.c1.checkpointDir = /root/soft/flume/checkpoint/behavior1

a1.channels.c1.dataDirs = /root/soft/flume/data/behavior1/

a1.channels.c1.maxFileSize = 2146435071

a1.channels.c1.capacity = 1000000

a1.channels.c1.keep-alive = 6

# sink1

a1.sinks.k1.type = hdfs

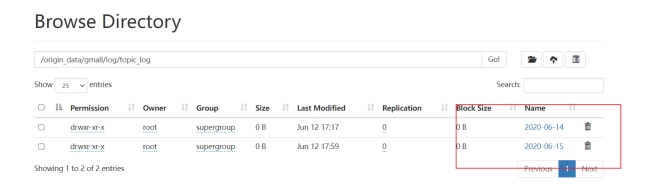

a1.sinks.k1.hdfs.path = /origin_data/gmall/log/topic_log/%Y-%m-%d

a1.sinks.k1.hdfs.filePrefix = log-

a1.sinks.k1.hdfs.round = false

a1.sinks.k1.hdfs.rollInterval = 10

a1.sinks.k1.hdfs.rollSize = 134217728

a1.sinks.k1.hdfs.rollCount = 0

# Write the output files as an lzop-compressed stream.

a1.sinks.k1.hdfs.fileType = CompressedStream

a1.sinks.k1.hdfs.codeC = lzop

# Wire the components together

a1.sources.r1.channels = c1

a1.sinks.k1.channel= c1

Write the start/stop script for the log consumption flume: vim f2.sh

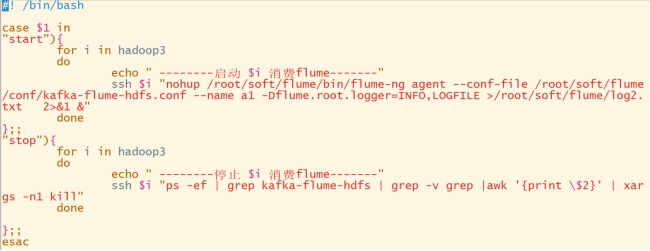

#! /bin/bash

case $1 in

"start"){

for i in hadoop3

do

echo " --------启动 $i 消费flume-------"

ssh $i "nohup /root/soft/flume/bin/flume-ng agent --conf-file /root/soft/flume/conf/kafka-flume-hdfs.conf --name a1 -Dflume.root.logger=INFO,LOGFILE >/root/soft/flume/log2.txt 2>&1 &"

done

};;

"stop"){

for i in hadoop3

do

echo " --------停止 $i 消费flume-------"

ssh $i "ps -ef | grep kafka-flume-hdfs | grep -v grep |awk '{print \$2}' | xargs -n1 kill"

done

};;

esac

#Grant permissions:

chmod 777 f2.sh

Write the start/stop script for the whole collection pipeline:

vim cluster.sh

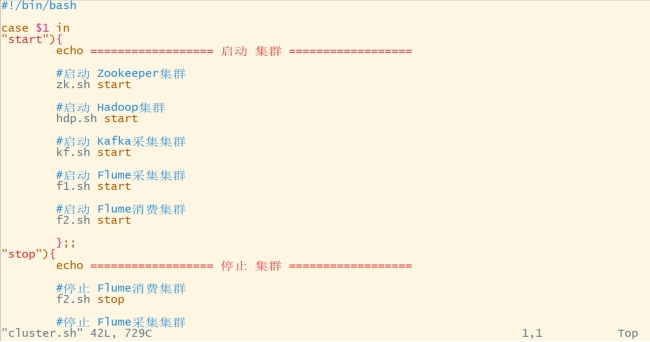

#!/bin/bash

case $1 in

"start"){

echo ================== 启动 集群 ==================

#启动 Zookeeper集群

zk.sh start

#启动 Hadoop集群

hdp.sh start

#启动 Kafka采集集群

kf.sh start

#启动 Flume采集集群

f1.sh start

#启动 Flume消费集群

f2.sh start

};;

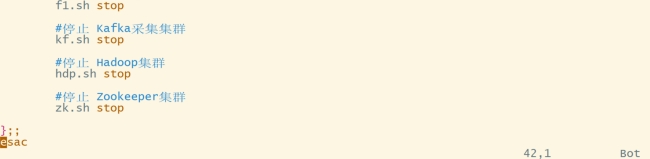

"stop"){

echo ================== 停止 集群 ==================

#停止 Flume消费集群

f2.sh stop

#停止 Flume采集集群

f1.sh stop

#停止 Kafka采集集群

kf.sh stop

#停止 Hadoop集群

hdp.sh stop

#停止 Zookeeper集群

zk.sh stop

};;

esac

#Grant permissions:

chmod 777 cluster.sh
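cluster.sh launches each service immediately after the previous one. Kafka needs ZooKeeper to be reachable, so a short readiness check between steps can make startup more predictable. The following is a minimal sketch, not part of the original scripts: `wait_for_port` is a hypothetical helper that relies on bash's /dev/tcp redirection, and port 2181 in the usage note is ZooKeeper's default client port, assumed here because this document does not state it.

```shell
#!/bin/bash
# Hypothetical helper: poll host:port once per second until it accepts a TCP
# connection or the timeout (in seconds, default 30) runs out.
wait_for_port() {
    local host="$1" port="$2" timeout="${3:-30}"
    local waited=0
    while [ "$waited" -lt "$timeout" ]; do
        # The subshell opens and immediately closes the connection.
        if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
            return 0
        fi
        sleep 1
        waited=$((waited + 1))
    done
    return 1
}
```

For example, `wait_for_port hadoop1 2181 30 && kf.sh start` would start Kafka only once ZooKeeper on hadoop1 accepts connections.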

#启动日志采集通道:

cluster.sh start

#执行lg.sh:

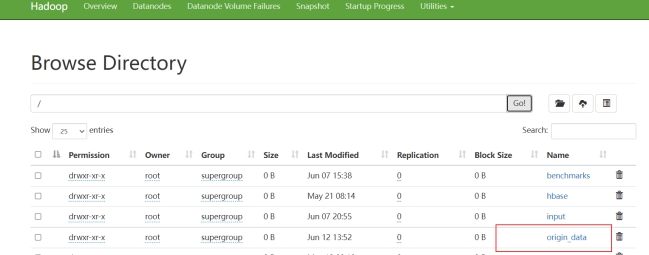

lg.sh查看网页端是否有数据存在:hadoop1:9870

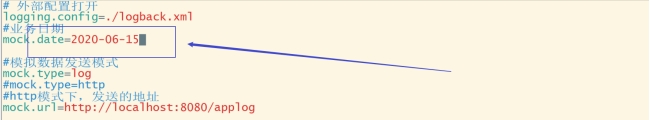

切换到applog修改application.properties中的业务日期为15:

cd /root/soft/applog/

vim application.properties

执行lg.sh:lg.sh

观察网页端是否有数据存在:

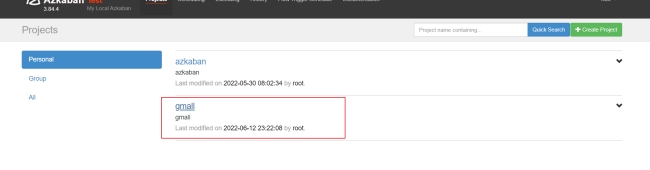

2. Business data collection platform;

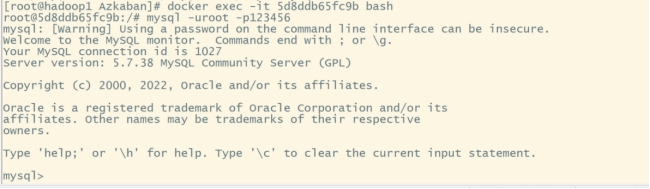

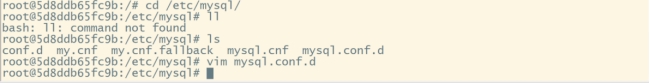

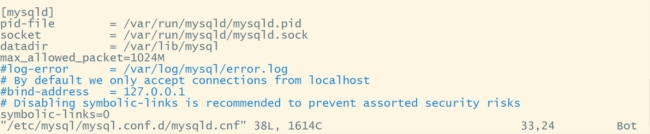

(1). Install mysql (see the URL below);

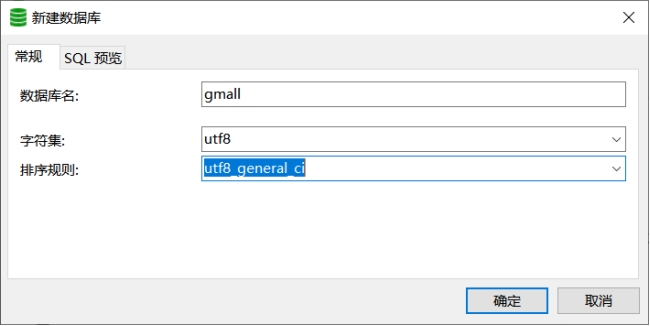

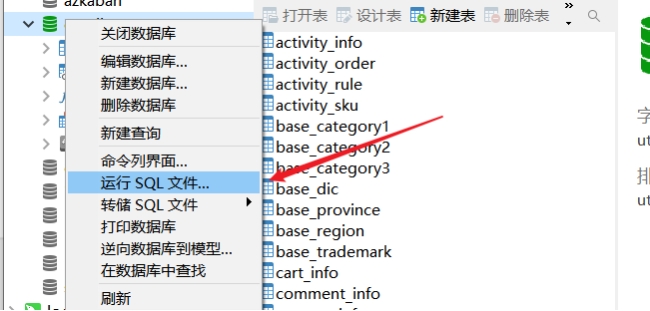

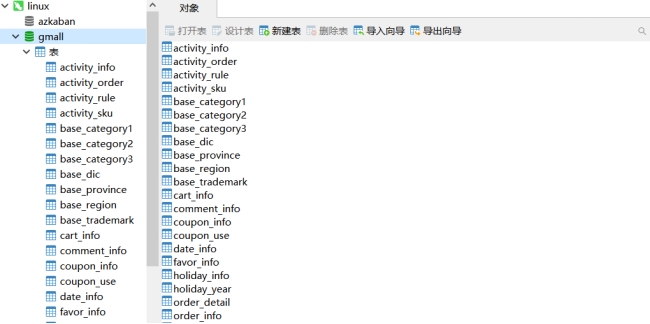

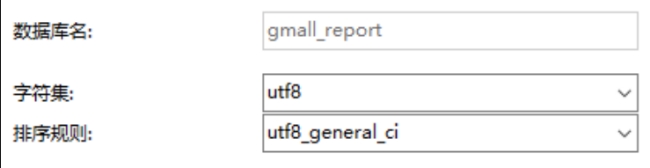

(2). Connect with navicat and create the gmall database:

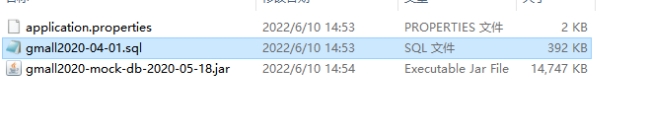

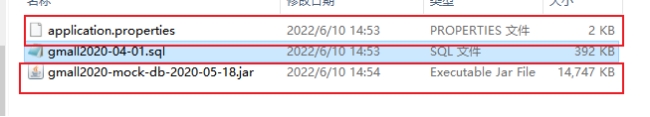

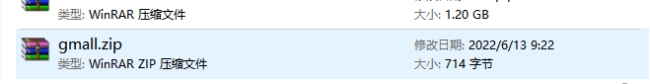

Generate the business data. Create a db_log directory under soft;

mkdir /root/soft/db_log

Upload gmall2020-mock-db-2020-05-18.jar and application.properties to db_log;

Edit application.properties: vim application.properties

mock.date=2020-06-14

mock.clear=1

Run the command to generate the data for 2020-06-14:

java -jar gmall2020-mock-db-2020-05-18.jar

Edit application.properties again, then rerun the command to generate the data for 2020-06-15:

mock.date=2020-06-15

mock.clear=0
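Editing mock.date and mock.clear by hand for every day is easy to get wrong. The following is a minimal sketch of a helper that rewrites both keys in place, assuming GNU sed and that both keys already exist in the file, one per line. `set_mock_props` is a hypothetical name.

```shell
#!/bin/bash
# Hypothetical helper: set mock.date and mock.clear in a properties file.
# $1 = properties file, $2 = date (YYYY-MM-DD), $3 = clear flag (0 or 1).
set_mock_props() {
    local file="$1" mock_date="$2" mock_clear="$3"
    sed -i \
        -e "s/^mock\.date=.*/mock.date=${mock_date}/" \
        -e "s/^mock\.clear=.*/mock.clear=${mock_clear}/" \
        "$file"
}
```

For the second day this would be `set_mock_props application.properties 2020-06-15 0`, run from the db_log directory before the jar is executed.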

java -jar gmall2020-mock-db-2020-05-18.jar

(3). Install sqoop;

上传sqoop安装包并解压:

tar -zxvf sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz -C /root/soft

#创建软连接:

ln -s sqoop-1.4.7.bin__hadoop-2.6.0 sqoop进入sqoop/conf目录,重命名sqoop-env-template.sh为sqoop-env.sh

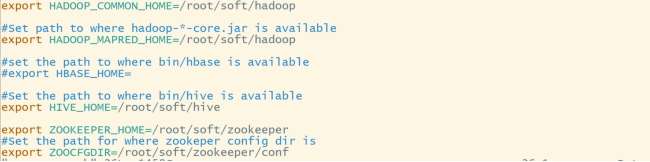

mv sqoop-env-template.sh sqoop-env.sh并修改配置文件:cd /root/soft/sqoop/conf

vim sqoop-env.sh修改:

export HADOOP_COMMON_HOME=/root/soft/hadoop

export HADOOP_MAPRED_HOME=/root/soft/hadoop

export HIVE_HOME=/root/soft/hive

export ZOOKEEPER_HOME=/root/soft/zookeeper

export ZOOCFGDIR=/root/soft/zookeeper/conf

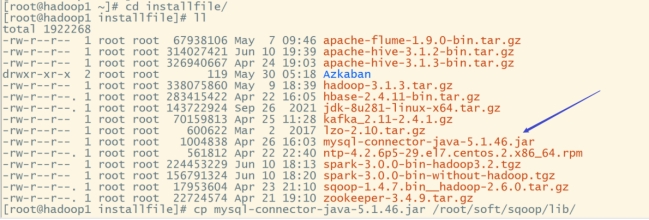

拷贝JDBC驱动,上传驱动并拷贝到sqoop的lib下:

cp mysql-connector-java-5.1.46.jar /root/soft/sqoop/lib

#验证sqoop,进入到sqoop目录:

cd /root/soft/sqoop

#执行sqoop命令:

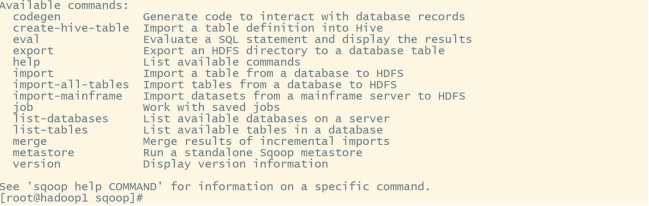

bin/sqoop help

#测试sqoop是否能够成功连接数据库:

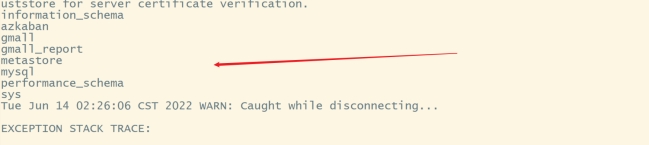

bin/sqoop list-databases --connect jdbc:mysql://hadoop1:3306/ --username root --password 123456

(4). Import the business data into HDFS;

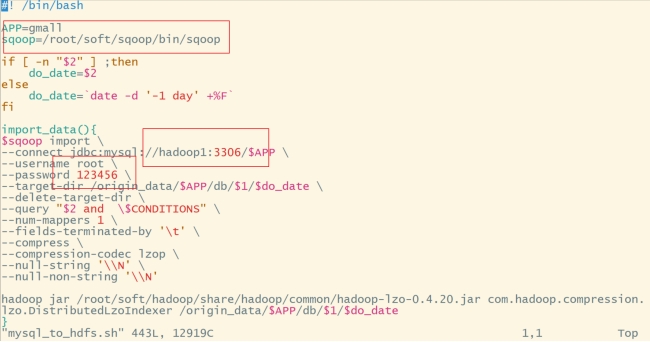

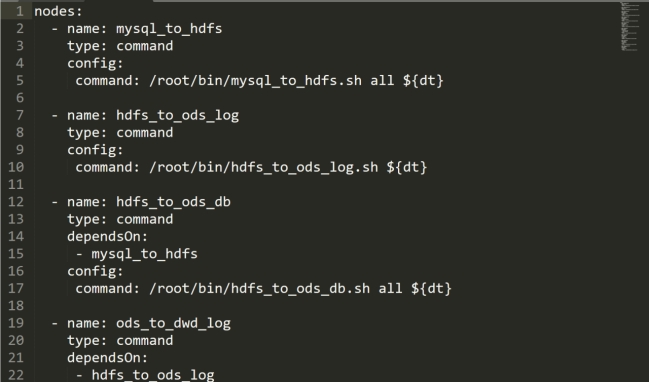

在root/bin下编写mysql_to_hdfs.sh脚本(展示部分需要修改的代码):vim mysql_to_hdfs.sh

#赋权限:

chmod 777 mysql_to_hdfs.sh

#初次导入:

mysql_to_hdfs.sh first 2020-06-14

#每日导入:

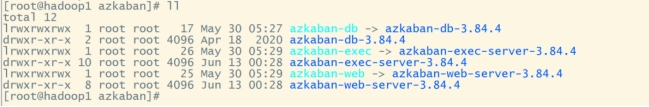

mysql_to_hdfs.sh all 2020-06-15(5).安装hive(只能安装尚硅谷提供的hive-3.1.2);

上传jar包,并解压:

tar -zxvf apache-hive-3.1.2-bin.tar.gz -C /root/soft

# 进入soft目录

cd /root/soft

#创建软连接:

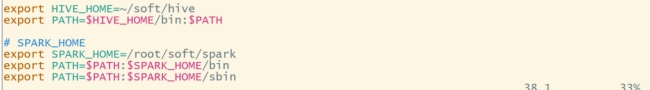

ln -s apache-hive-3.1.2-bin hive添加环境变量hive、spark:vim ~/.bashrc

export HIVE_HOME=~/soft/hive

export PATH=$HIVE_HOME/bin:$PATH

export SPARK_HOME=/root/soft/spark

export PATH=$PATH:$SPARK_HOME/bin

export PATH=$PATH:$SPARK_HOME/sbin

使环境变量生效:source ~/.bashrc

解决/root/soft/hive/lib下的日志jar包冲突:

mv log4j-slf4j-impl-2.10.0.jar log4j-slf4j-impl-2.10.0.jar.bak拷贝mysql驱动到/root/soft/hive/lib目录下:

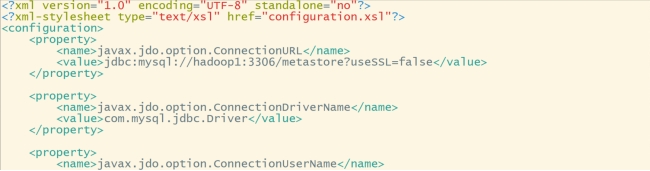

cp mysql-connector-java-5.1.46.jar /root/soft/hive/lib配置Metastore到mysql,在/root/soft/hive/conf目录下创建hive-site.xml,添加如下内容(截取部分代码):

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://hadoop1:3306/metastore?useSSL=false</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

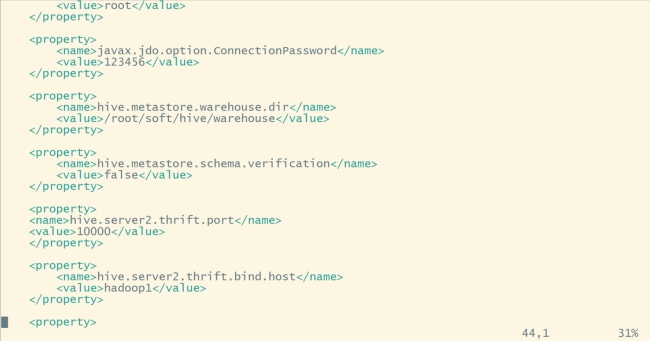

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>123456</value>

</property>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/root/soft/hive/warehouse</value>

</property>

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>

<property>

<name>hive.server2.thrift.port</name>

<value>10000</value>

</property>

<property>

<name>hive.server2.thrift.bind.host</name>

<value>hadoop1</value>

</property>

<property>

<name>hive.metastore.event.db.notification.api.auth</name>

<value>false</value>

</property>

<property>

<name>hive.cli.print.header</name>

<value>true</value>

</property>

<property>

<name>hive.cli.print.current.db</name>

<value>true</value>

</property>

<!-- Location of the Spark dependencies (note: port 8020 must match the namenode port) -->

<property>

<name>spark.yarn.jars</name>

<value>hdfs://hadoop1:8020/spark-jars/*</value>

</property>

<!-- Hive execution engine -->

<property>

<name>hive.execution.engine</name>

<value>spark</value>

</property>

<!-- Connection timeout between Hive and Spark -->

<property>

<name>hive.spark.client.connect.timeout</name>

<value>10000ms</value>

</property>

</configuration>

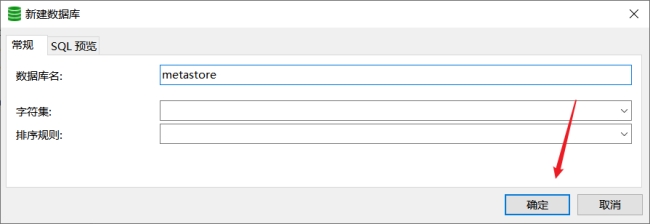

初始化元数据库,创建mysql数据库metastore:

初始化命令: schematool -initSchema -dbType mysql -verbose

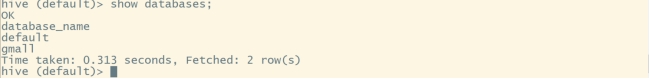

启动hive客户端:hive

查看数据库:show databases;

(6)配置Yarn容量调度器多队列

1,修改容量调度器配置文件

默认Yarn的配置下,容量调度器只有一条default队列。在capacity-scheduler.xml中可以配置多条队列,\修改**以下属性,增加hive队列。

<property>

<name>yarn.scheduler.capacity.root.queues</name>

<value>default,hive</value>

<description>

Add a hive queue alongside default

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.default.capacity</name>

<value>50</value>

<description>

The default queue's capacity is 50%

</description>

</property>

Also add the required properties for the new queue:

<property>

<name>yarn.scheduler.capacity.root.hive.capacity</name>

<value>50</value>

<description>

The hive queue's capacity is 50%

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.hive.user-limit-factor</name>

<value>1</value>

<description>

Multiple of the queue's capacity that a single user may acquire; 1 limits a user to the queue's configured capacity

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.hive.maximum-capacity</name>

<value>80</value>

<description>

Maximum capacity of the hive queue (the upper limit it may grow to by borrowing from other queues when its own resources run short)

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.hive.state</name>

<value>RUNNING</value>

<description>

Sets the hive queue to RUNNING; without this the queue cannot be used

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.hive.acl_submit_applications</name>

<value>*</value>

<description>

Access control: who may submit applications to this queue; * means anyone

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.hive.acl_administer_queue</name>

<value>*</value>

<description>

Access control: who may administer (including submit and cancel) applications in this queue; * means anyone

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.hive.acl_application_max_priority</name>

<value>*</value>

<description>

Specifies which users may submit applications with a configured priority

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.hive.maximum-application-lifetime</name>

<value>-1</value>

<description>

Maximum lifetime, in seconds, of an application in the hive queue. Any value less than or equal to zero disables the limit.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.hive.default-application-lifetime</name>

<value>-1</value>

<description>

Default lifetime, in seconds, of an application in the hive queue. Any value less than or equal to zero disables the limit.

</description>

</property>

2. Distribute the configuration file

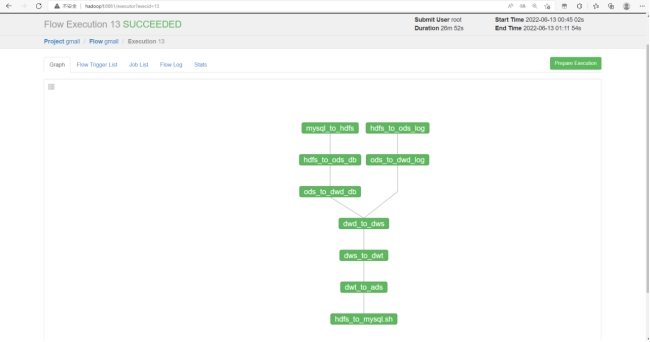

xsync /root/soft/hadoop/etc/hadoop/capacity-scheduler.xml3.数据仓库系统;

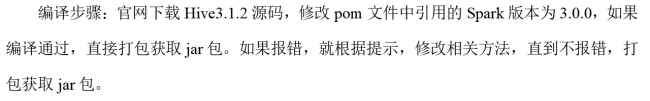

(1).部署hive on spark;

上传spark安装包(必须使用尚硅谷提供的安装包一个包为纯净版)并解压:

tar -zxvf spark-3.0.0-bin-hadoop3.2.tgz -C /root/soft

tar -zxvf spark-3.0.0-bin-without-hadoop.tgz -C /root/soft

# 进入soft目录

cd /root/soft

#创建软连接:

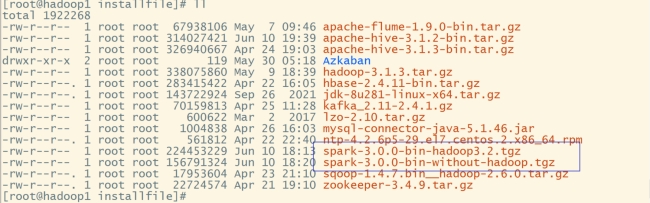

ln -s spark-3.0.0-bin-hadoop3.2 spark在hive中创建spark配置文件:

vim /root/soft/hive/conf/spark-defaults.conf

spark.master yarn

spark.eventLog.enabled true

spark.eventLog.dir hdfs://hadoop1:8020/spark-history

spark.executor.memory 1g

spark.driver.memory 1g

在HDFS创建如下路径:

hdfs dfs -mkdir /spark-history

hdfs dfs -mkdir /spark-jars上传spark纯净版jar包到hdfs

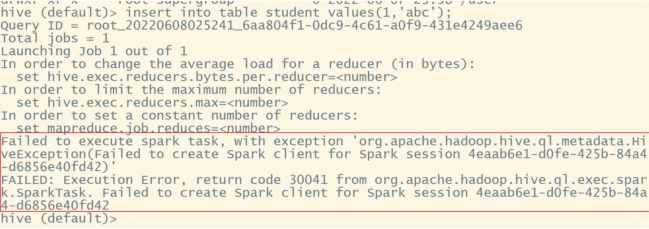

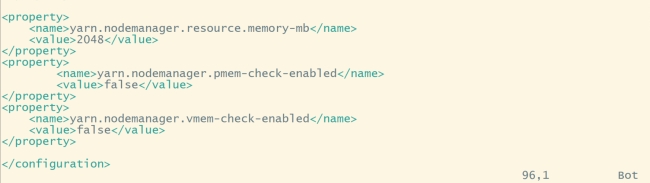

hdfs dfs -put /root/soft/spark-3.0.0-bin-without-hadoop/jars/* /spark-jars运行hive on spark之前需要更改,否则会报以下错误:

在/root/soft/hadoop/etc/hadoop/yarn-site.xml中配置:

<property>

<name>yarn.nodemanager.pmem-check-enabled</name>

<value>false</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

同步到hadoop2、hadoop3:

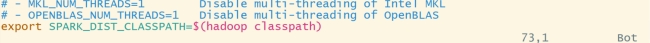

xsync yarn-site.xml进入/root/soft/spark/conf

将spark-env.sh.template 复制一份:

cp spark-env.sh.template spark-env.sh然后在spark-env.sh中配置:

export SPARK_DIST_CLASSPATH=$(hadoop classpath)

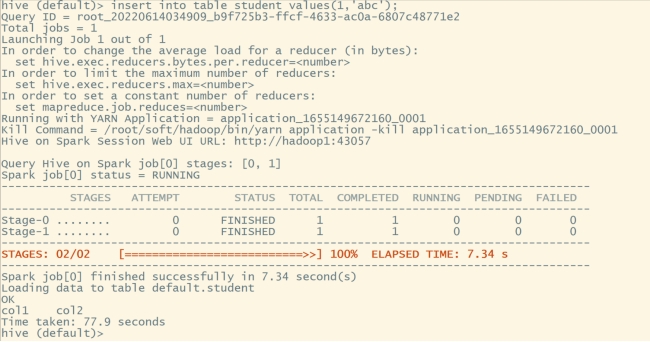

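Start the hive client and test hive on spark;

#Command to start the hive client:

hive

#Create a table:

create table student(id int, name string);

#Insert data:

insert into table student values(1,'abc');

The figure shows that the spark test succeeded;

Create the gmall database: create database gmall;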

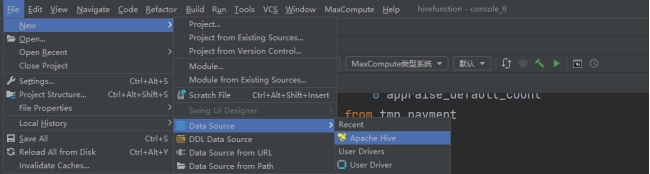

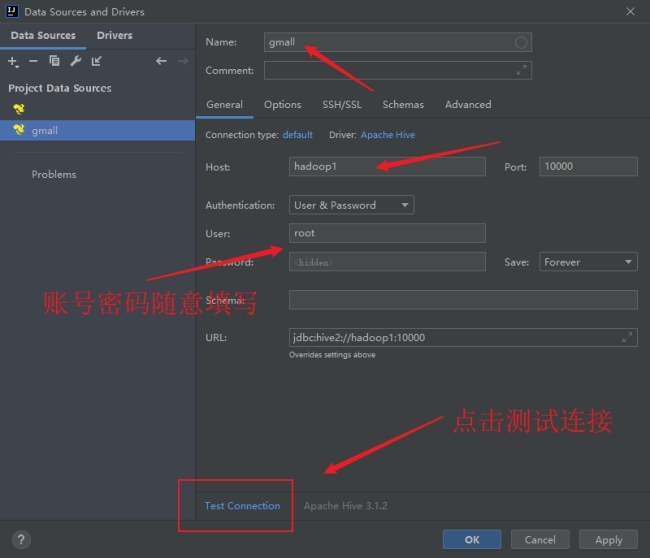

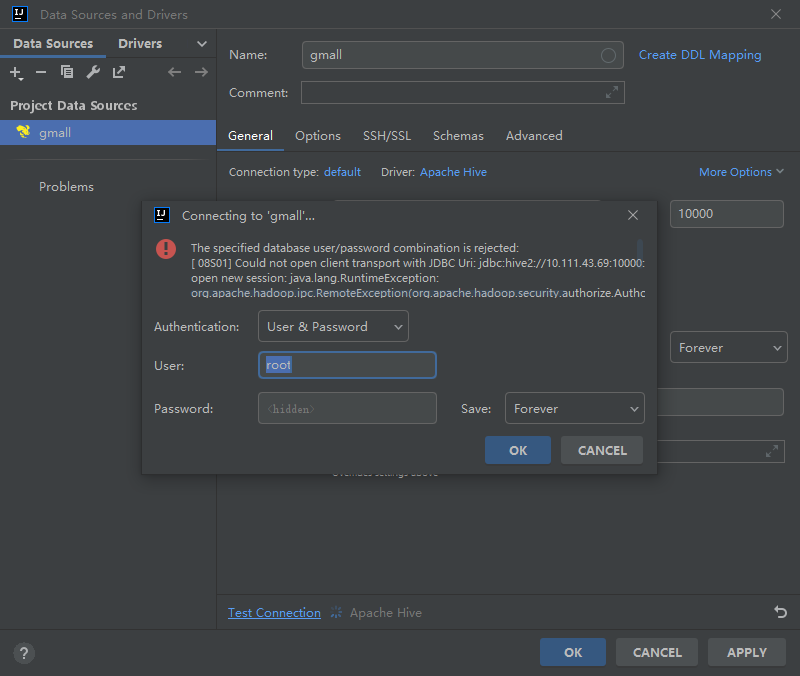

(2).启动hiveserver2,连接idea,

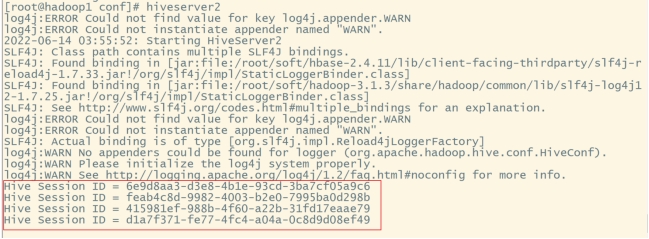

启动命令:hiveserver2

等待跑出4个session,连接idea:

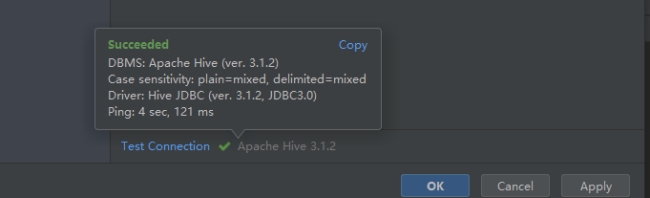

测试连接:

连接成功就可以使用了。

如果出现:

则需要:

修改 $HADOOP_HOME/etc/hadoop/core-site.xml 配置文件,添加如下配置:

<!--远程登录 hive -->

<!--下面的 hadoop 是用户名和用户组,用自己的就可以-->

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

Distribute core-site.xml:

xsync /root/soft/hadoop/etc/hadoop/core-site.xml

Restart the hadoop cluster:

hdp.sh stop

hdp.sh start

Test the connection again

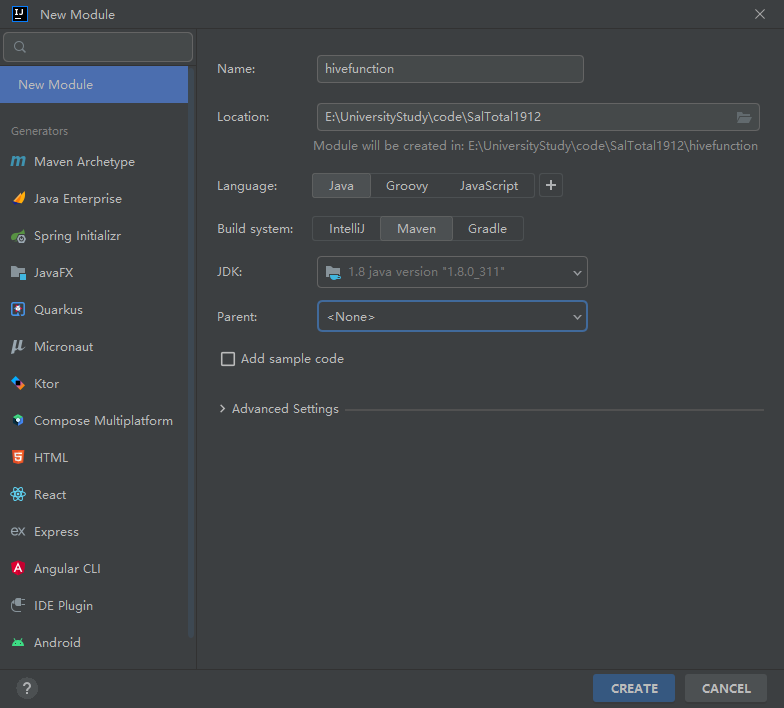

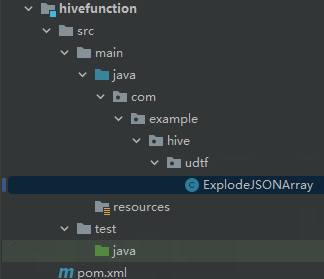

(3). Create the UDTF function;

Create a maven project: hivefunction

Add the hive dependency to pom.xml

<dependencies>

<!-- Add the hive dependency -->

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-exec</artifactId>

<version>3.1.2</version>

</dependency>

</dependencies>

Create the ExplodeJSONArray class:

package com.example.hive.udtf;

import org.apache.hadoop.hive.ql.exec.UDFArgumentException;

import org.apache.hadoop.hive.ql.metadata.HiveException;

import org.apache.hadoop.hive.ql.udf.generic.GenericUDTF;

import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspector;

import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspectorFactory;

import org.apache.hadoop.hive.serde2.objectinspector.StructObjectInspector;

import org.apache.hadoop.hive.serde2.objectinspector.primitive.PrimitiveObjectInspectorFactory;

import org.json.JSONArray;

import java.util.ArrayList;

import java.util.List;

/**

* @author 成大事

* @since 2022/6/18 17:23

*/

public class ExplodeJSONArray extends GenericUDTF {

@Override

public StructObjectInspector initialize(StructObjectInspector argOIs) throws UDFArgumentException {

// 1 Validate the number of arguments

if (argOIs.getAllStructFieldRefs().size() != 1){

throw new UDFArgumentException("explode_json_array takes exactly one argument");

}

// 2 The first argument must be a string

if(!"string".equals(argOIs.getAllStructFieldRefs().get(0).getFieldObjectInspector().getTypeName())){

throw new UDFArgumentException("The first argument of explode_json_array must be of type string");

}

// 3 Define the name and type of the returned column

List<String> fieldNames = new ArrayList<String>();

List<ObjectInspector> fieldOIs = new ArrayList<ObjectInspector>();

fieldNames.add("items");

fieldOIs.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);

return ObjectInspectorFactory.getStandardStructObjectInspector(fieldNames, fieldOIs);

}

public void process(Object[] objects) throws HiveException {

// 1 Get the input value

String jsonArray = objects[0].toString();

// 2 Convert the string into a JSON array

JSONArray actions = new JSONArray(jsonArray);

// 3 Emit one row per element of the array

for (int i = 0; i < actions.length(); i++) {

String[] result = new String[1];

result[0] = actions.getString(i);

forward(result);

}

}

public void close() throws HiveException {

}

}在HDFS 创建/user/hive/jars 文件夹

hdfs dfs -mkdir -p /user/hive/jars打包上传到HDFS的/user/hive/jars路径下:

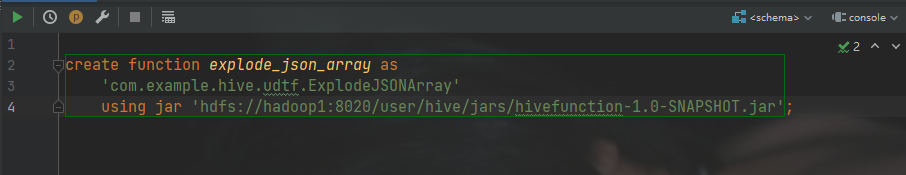

hdfs dfs -put hivefunction-1.0-SNAPSHOT.jar /user/hive/jars刚才idea已经连接了hive:

先使用use gmall :

然后在hive里面创建永久函数:

create function explode_json_array as 'com.example.hive.udtf.ExplodeJSONArray' using jar 'hdfs://hadoop1:8020/user/hive/jars/hivefunction-1.0-SNAPSHOT.jar';

(4).建ODS层(用户行为数据)表;

drop table if exists ods_log;

CREATE EXTERNAL TABLE ods_log (`line` string)

PARTITIONED BY (`dt` string) -- 按照时间创建分区

STORED AS -- 指定存储方式,读数据采用LzoTextInputFormat;

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_log' -- 指定数据在hdfs上的存储位置

;

-- 加载数据

load data inpath '/origin_data/gmall/log/topic_log/2020-06-14' into table ods_log partition(dt='2020-06-14');

-- 查看是否加载成功

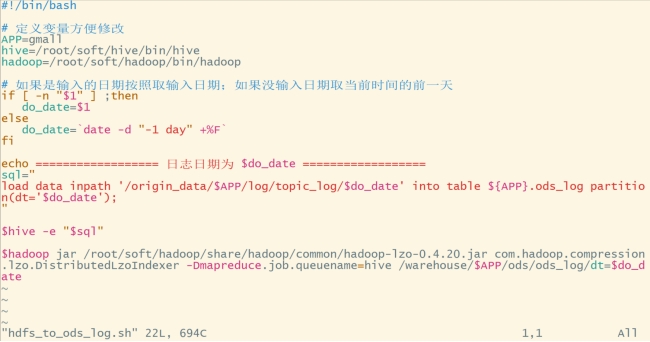

select * from ods_log limit 2;在/root/bin下创建脚本:vim hdfs_to_ods_log.sh

#!/bin/bash

# Define variables so they are easy to change

APP=gmall

hive=/root/soft/hive/bin/hive

hadoop=/root/soft/hadoop/bin/hadoop

# Use the date passed as an argument; if none is given, use yesterday's date

if [ -n "$1" ] ;then

do_date=$1

else

do_date=`date -d "-1 day" +%F`

fi

echo "================== Log date: $do_date =================="

sql="

load data inpath '/origin_data/$APP/log/topic_log/$do_date' into table ${APP}.ods_log partition(dt='$do_date');

"

$hive -e "$sql"

$hadoop jar /root/soft/hadoop/share/hadoop/common/hadoop-lzo-0.4.20.jar com.hadoop.compression.lzo.DistributedLzoIndexer -Dmapreduce.job.queuename=hive /warehouse/$APP/ods/ods_log/dt=$do_date

#Grant permissions:

chmod 777 hdfs_to_ods_log.sh
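The script accepts any non-empty first argument as the date, so a typo such as 20200615 ends up in the partition name and the HDFS path. The following is a minimal sketch of the same "argument or yesterday" rule with a format check added. `resolve_do_date` is a hypothetical helper, and the fallback uses GNU date syntax as the script does.

```shell
#!/bin/bash
# Hypothetical helper: print the date to process.
# With an argument, accept it only if it has the shape YYYY-MM-DD;
# without one, fall back to yesterday.
resolve_do_date() {
    if [ -n "$1" ]; then
        case "$1" in
            [0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9])
                echo "$1"
                ;;
            *)
                echo "invalid date: $1 (expected YYYY-MM-DD)" >&2
                return 1
                ;;
        esac
    else
        date -d "-1 day" +%F
    fi
}
```

Inside a loading script this could be used as `do_date=$(resolve_do_date "$1") || exit 1`. The check only covers the shape of the string, not whether the calendar date exists.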

#使用脚本:

hdfs_to_ods_log.sh 2020-06-15查看数据:

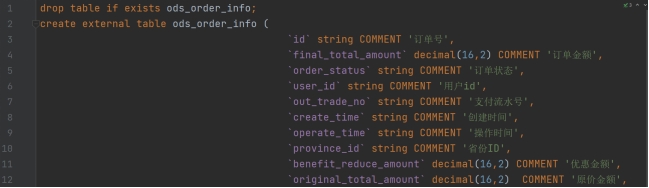

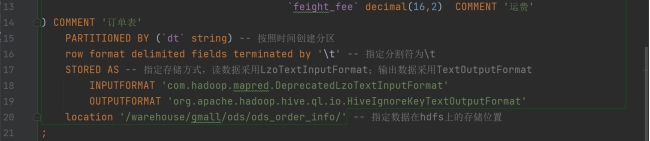

select * from ods_log where dt='2020-06-15' limit 2;创建ODS层业务数据表,仅展示部分sql代码;

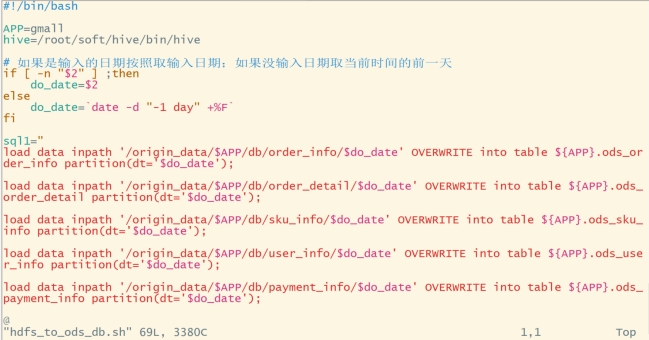

Create the ODS data loading script: vim hdfs_to_ods_db.sh

#!/bin/bash

APP=gmall

hive=/root/soft/hive/bin/hive

# Use the date passed as the second argument; if none is given, use yesterday's date

if [ -n "$2" ] ;then

do_date=$2

else

do_date=`date -d "-1 day" +%F`

fi

sql1="

load data inpath '/origin_data/$APP/db/order_info/$do_date' OVERWRITE into table ${APP}.ods_order_info partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/order_detail/$do_date' OVERWRITE into table ${APP}.ods_order_detail partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/sku_info/$do_date' OVERWRITE into table ${APP}.ods_sku_info partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/user_info/$do_date' OVERWRITE into table ${APP}.ods_user_info partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/payment_info/$do_date' OVERWRITE into table ${APP}.ods_payment_info partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/base_category1/$do_date' OVERWRITE into table ${APP}.ods_base_category1 partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/base_category2/$do_date' OVERWRITE into table ${APP}.ods_base_category2 partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/base_category3/$do_date' OVERWRITE into table ${APP}.ods_base_category3 partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/base_trademark/$do_date' OVERWRITE into table ${APP}.ods_base_trademark partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/activity_info/$do_date' OVERWRITE into table ${APP}.ods_activity_info partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/activity_order/$do_date' OVERWRITE into table ${APP}.ods_activity_order partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/cart_info/$do_date' OVERWRITE into table ${APP}.ods_cart_info partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/comment_info/$do_date' OVERWRITE into table ${APP}.ods_comment_info partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/coupon_info/$do_date' OVERWRITE into table ${APP}.ods_coupon_info partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/coupon_use/$do_date' OVERWRITE into table ${APP}.ods_coupon_use partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/favor_info/$do_date' OVERWRITE into table ${APP}.ods_favor_info partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/order_refund_info/$do_date' OVERWRITE into table ${APP}.ods_order_refund_info partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/order_status_log/$do_date' OVERWRITE into table ${APP}.ods_order_status_log partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/spu_info/$do_date' OVERWRITE into table ${APP}.ods_spu_info partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/activity_rule/$do_date' OVERWRITE into table ${APP}.ods_activity_rule partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/base_dic/$do_date' OVERWRITE into table ${APP}.ods_base_dic partition(dt='$do_date');

"

sql2="

load data inpath '/origin_data/$APP/db/base_province/$do_date' OVERWRITE into table ${APP}.ods_base_province;

load data inpath '/origin_data/$APP/db/base_region/$do_date' OVERWRITE into table ${APP}.ods_base_region;

"

case $1 in

"first"){

$hive -e "$sql1$sql2"

};;

"all"){

$hive -e "$sql1"

};;

esac

#Grant permissions:

chmod 777 hdfs_to_ods_db.sh
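The partitioned LOAD DATA statements in sql1 differ only in the table name, so they can be generated from a list. The following is a minimal sketch of that idea; `gen_partitioned_loads` is a hypothetical helper, and it assumes every source table maps to a Hive table named `ods_<table>` partitioned by `dt`, as in the script above.

```shell
#!/bin/bash
# Hypothetical helper: print one LOAD DATA statement per table name.
# $1 = app/database name, $2 = date, remaining arguments = source table names.
gen_partitioned_loads() {
    local app="$1" do_date="$2"
    shift 2
    local t
    for t in "$@"; do
        printf "load data inpath '/origin_data/%s/db/%s/%s' OVERWRITE into table %s.ods_%s partition(dt='%s');\n" \
            "$app" "$t" "$do_date" "$app" "$t" "$do_date"
    done
}
```

In the script, `sql1=$(gen_partitioned_loads "$APP" "$do_date" order_info order_detail sku_info)` (extended with the remaining table names) would produce the same text as the hand-written block. The two unpartitioned tables in sql2 need a separate format string.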

#运行脚本加载数据初次导入:

hdfs_to_ods_db.sh first 2020-06-14

#运行脚本加载数据每日导入:

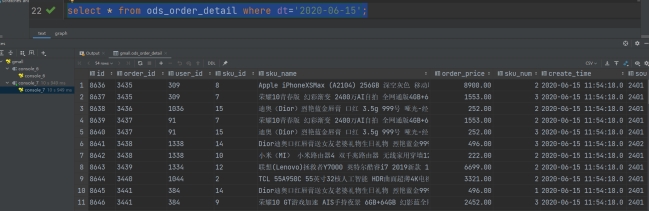

hdfs_to_ods_db.sh all 2020-06-15测试是否成功导入数据:

select * from ods_order_detail where dt='2020-06-15';

(5). Create the DWD layer (parsed user behavior logs) tables;

Only part of the SQL is shown. Create the data loading script: vim ods_to_dwd_log.sh

#!/bin/bash

hive=/root/soft/hive/bin/hive

APP=gmall

# Use the date passed as an argument; if none is given, use yesterday's date

if [ -n "$1" ] ;then

do_date=$1

else

do_date=`date -d "-1 day" +%F`

fi

sql="

SET mapreduce.job.queuename=hive;

SET hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

insert overwrite table ${APP}.dwd_start_log partition(dt='$do_date')

select

get_json_object(line,'$.common.ar'),

get_json_object(line,'$.common.ba'),

get_json_object(line,'$.common.ch'),

get_json_object(line,'$.common.md'),

get_json_object(line,'$.common.mid'),

get_json_object(line,'$.common.os'),

get_json_object(line,'$.common.uid'),

get_json_object(line,'$.common.vc'),

get_json_object(line,'$.start.entry'),

get_json_object(line,'$.start.loading_time'),

get_json_object(line,'$.start.open_ad_id'),

get_json_object(line,'$.start.open_ad_ms'),

get_json_object(line,'$.start.open_ad_skip_ms'),

get_json_object(line,'$.ts')

from ${APP}.ods_log

where dt='$do_date'

and get_json_object(line,'$.start') is not null;

insert overwrite table ${APP}.dwd_action_log partition(dt='$do_date')

select

get_json_object(line,'$.common.ar'),

get_json_object(line,'$.common.ba'),

get_json_object(line,'$.common.ch'),

get_json_object(line,'$.common.md'),

get_json_object(line,'$.common.mid'),

get_json_object(line,'$.common.os'),

get_json_object(line,'$.common.uid'),

get_json_object(line,'$.common.vc'),

get_json_object(line,'$.page.during_time'),

get_json_object(line,'$.page.item'),

get_json_object(line,'$.page.item_type'),

get_json_object(line,'$.page.last_page_id'),

get_json_object(line,'$.page.page_id'),

get_json_object(line,'$.page.sourceType'),

get_json_object(action,'$.action_id'),

get_json_object(action,'$.item'),

get_json_object(action,'$.item_type'),

get_json_object(action,'$.ts')

from ${APP}.ods_log lateral view ${APP}.explode_json_array(get_json_object(line,'$.actions')) tmp as action

where dt='$do_date'

and get_json_object(line,'$.actions') is not null;

insert overwrite table ${APP}.dwd_display_log partition(dt='$do_date')

select

get_json_object(line,'$.common.ar'),

get_json_object(line,'$.common.ba'),

get_json_object(line,'$.common.ch'),

get_json_object(line,'$.common.md'),

get_json_object(line,'$.common.mid'),

get_json_object(line,'$.common.os'),

get_json_object(line,'$.common.uid'),

get_json_object(line,'$.common.vc'),

get_json_object(line,'$.page.during_time'),

get_json_object(line,'$.page.item'),

get_json_object(line,'$.page.item_type'),

get_json_object(line,'$.page.last_page_id'),

get_json_object(line,'$.page.page_id'),

get_json_object(line,'$.page.sourceType'),

get_json_object(line,'$.ts'),

get_json_object(display,'$.displayType'),

get_json_object(display,'$.item'),

get_json_object(display,'$.item_type'),

get_json_object(display,'$.order')

from ${APP}.ods_log lateral view ${APP}.explode_json_array(get_json_object(line,'$.displays')) tmp as display

where dt='$do_date'

and get_json_object(line,'$.displays') is not null;

insert overwrite table ${APP}.dwd_page_log partition(dt='$do_date')

select

get_json_object(line,'$.common.ar'),

get_json_object(line,'$.common.ba'),

get_json_object(line,'$.common.ch'),

get_json_object(line,'$.common.md'),

get_json_object(line,'$.common.mid'),

get_json_object(line,'$.common.os'),

get_json_object(line,'$.common.uid'),

get_json_object(line,'$.common.vc'),

get_json_object(line,'$.page.during_time'),

get_json_object(line,'$.page.item'),

get_json_object(line,'$.page.item_type'),

get_json_object(line,'$.page.last_page_id'),

get_json_object(line,'$.page.page_id'),

get_json_object(line,'$.page.sourceType'),

get_json_object(line,'$.ts')

from ${APP}.ods_log

where dt='$do_date'

and get_json_object(line,'$.page') is not null;

insert overwrite table ${APP}.dwd_error_log partition(dt='$do_date')

select

get_json_object(line,'$.common.ar'),

get_json_object(line,'$.common.ba'),

get_json_object(line,'$.common.ch'),

get_json_object(line,'$.common.md'),

get_json_object(line,'$.common.mid'),

get_json_object(line,'$.common.os'),

get_json_object(line,'$.common.uid'),

get_json_object(line,'$.common.vc'),

get_json_object(line,'$.page.item'),

get_json_object(line,'$.page.item_type'),

get_json_object(line,'$.page.last_page_id'),

get_json_object(line,'$.page.page_id'),

get_json_object(line,'$.page.sourceType'),

get_json_object(line,'$.start.entry'),

get_json_object(line,'$.start.loading_time'),

get_json_object(line,'$.start.open_ad_id'),

get_json_object(line,'$.start.open_ad_ms'),

get_json_object(line,'$.start.open_ad_skip_ms'),

get_json_object(line,'$.actions'),

get_json_object(line,'$.displays'),

get_json_object(line,'$.ts'),

get_json_object(line,'$.err.error_code'),

get_json_object(line,'$.err.msg')

from ${APP}.ods_log

where dt='$do_date'

and get_json_object(line,'$.err') is not null;

"

$hive -e "$sql"

#赋权限:

chmod 777 ods_to_dwd_log.sh

#执行脚本加载数据:

ods_to_dwd_log.sh 2020-06-15查询导入结果:

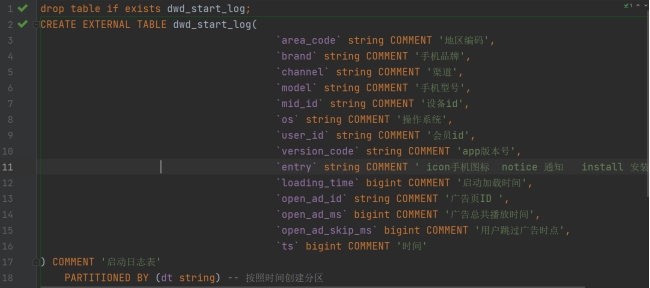

select * from dwd_start_log where dt='2020-06-15' limit 2;DWD层业务数据表,仅展示部分sql代码;

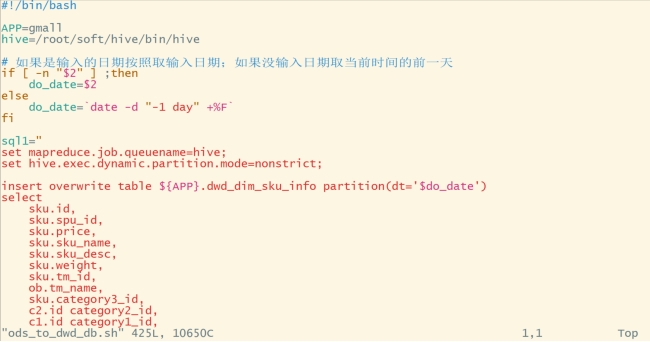

创建DWD数据加载脚本:vim ods_to_dwd_db.sh

#!/bin/bash

APP=gmall

hive=/root/soft/hive/bin/hive

# Use the date passed as the second argument; if none is given, use yesterday's date

if [ -n "$2" ] ;then

do_date=$2

else

do_date=`date -d "-1 day" +%F`

fi

sql1="

set mapreduce.job.queuename=hive;

set hive.exec.dynamic.partition.mode=nonstrict;

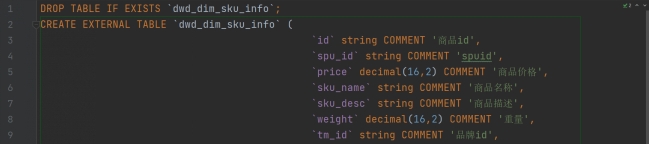

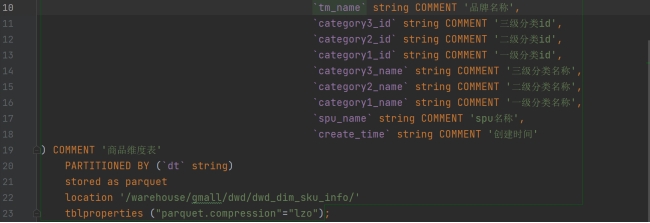

insert overwrite table ${APP}.dwd_dim_sku_info partition(dt='$do_date')

select

sku.id,

sku.spu_id,

sku.price,

sku.sku_name,

sku.sku_desc,

sku.weight,

sku.tm_id,

ob.tm_name,

sku.category3_id,

c2.id category2_id,

c1.id category1_id,

c3.name category3_name,

c2.name category2_name,

c1.name category1_name,

spu.spu_name,

sku.create_time

from

(

select * from ${APP}.ods_sku_info where dt='$do_date'

)sku

join

(

select * from ${APP}.ods_base_trademark where dt='$do_date'

)ob on sku.tm_id=ob.tm_id

join

(

select * from ${APP}.ods_spu_info where dt='$do_date'

)spu on spu.id = sku.spu_id

join

(

select * from ${APP}.ods_base_category3 where dt='$do_date'

)c3 on sku.category3_id=c3.id

join

(

select * from ${APP}.ods_base_category2 where dt='$do_date'

)c2 on c3.category2_id=c2.id

join

(

select * from ${APP}.ods_base_category1 where dt='$do_date'

)c1 on c2.category1_id=c1.id;

insert overwrite table ${APP}.dwd_dim_coupon_info partition(dt='$do_date')

select

id,

coupon_name,

coupon_type,

condition_amount,

condition_num,

activity_id,

benefit_amount,

benefit_discount,

create_time,

range_type,

spu_id,

tm_id,

category3_id,

limit_num,

operate_time,

expire_time

from ${APP}.ods_coupon_info

where dt='$do_date';

insert overwrite table ${APP}.dwd_dim_activity_info partition(dt='$do_date')

select

id,

activity_name,

activity_type,

start_time,

end_time,

create_time

from ${APP}.ods_activity_info

where dt='$do_date';

insert overwrite table ${APP}.dwd_fact_order_detail partition(dt='$do_date')

select

id,

order_id,

user_id,

sku_id,

sku_name,

order_price,

sku_num,

create_time,

province_id,

source_type,

source_id,

original_amount_d,

if(rn=1,final_total_amount-(sum_div_final_amount-final_amount_d),final_amount_d),

if(rn=1,feight_fee-(sum_div_feight_fee-feight_fee_d),feight_fee_d),

if(rn=1,benefit_reduce_amount-(sum_div_benefit_reduce_amount-benefit_reduce_amount_d),benefit_reduce_amount_d)

from

(

select

od.id,

od.order_id,

od.user_id,

od.sku_id,

od.sku_name,

od.order_price,

od.sku_num,

od.create_time,

oi.province_id,

od.source_type,

od.source_id,

round(od.order_price*od.sku_num,2) original_amount_d,

round(od.order_price*od.sku_num/oi.original_total_amount*oi.final_total_amount,2) final_amount_d,

round(od.order_price*od.sku_num/oi.original_total_amount*oi.feight_fee,2) feight_fee_d,

round(od.order_price*od.sku_num/oi.original_total_amount*oi.benefit_reduce_amount,2) benefit_reduce_amount_d,

row_number() over(partition by od.order_id order by od.id desc) rn,

oi.final_total_amount,

oi.feight_fee,

oi.benefit_reduce_amount,

sum(round(od.order_price*od.sku_num/oi.original_total_amount*oi.final_total_amount,2)) over(partition by od.order_id) sum_div_final_amount,

sum(round(od.order_price*od.sku_num/oi.original_total_amount*oi.feight_fee,2)) over(partition by od.order_id) sum_div_feight_fee,

sum(round(od.order_price*od.sku_num/oi.original_total_amount*oi.benefit_reduce_amount,2)) over(partition by od.order_id) sum_div_benefit_reduce_amount

from

(

select * from ${APP}.ods_order_detail where dt='$do_date'

) od

join

(

select * from ${APP}.ods_order_info where dt='$do_date'

) oi

on od.order_id=oi.id

)t1;

insert overwrite table ${APP}.dwd_fact_payment_info partition(dt='$do_date')

select

pi.id,

pi.out_trade_no,

pi.order_id,

pi.user_id,

pi.alipay_trade_no,

pi.total_amount,

pi.subject,

pi.payment_type,

pi.payment_time,

oi.province_id

from

(

select * from ${APP}.ods_payment_info where dt='$do_date'

)pi

join

(

select id, province_id from ${APP}.ods_order_info where dt='$do_date'

)oi

on pi.order_id = oi.id;

insert overwrite table ${APP}.dwd_fact_order_refund_info partition(dt='$do_date')

select

id,

user_id,

order_id,

sku_id,

refund_type,

refund_num,

refund_amount,

refund_reason_type,

create_time

from ${APP}.ods_order_refund_info

where dt='$do_date';

insert overwrite table ${APP}.dwd_fact_comment_info partition(dt='$do_date')

select

id,

user_id,

sku_id,

spu_id,

order_id,

appraise,

create_time

from ${APP}.ods_comment_info

where dt='$do_date';

insert overwrite table ${APP}.dwd_fact_cart_info partition(dt='$do_date')

select

id,

user_id,

sku_id,

cart_price,

sku_num,

sku_name,

create_time,

operate_time,

is_ordered,

order_time,

source_type,

source_id

from ${APP}.ods_cart_info

where dt='$do_date';

insert overwrite table ${APP}.dwd_fact_favor_info partition(dt='$do_date')

select

id,

user_id,

sku_id,

spu_id,

is_cancel,

create_time,

cancel_time

from ${APP}.ods_favor_info

where dt='$do_date';

insert overwrite table ${APP}.dwd_fact_coupon_use partition(dt)

select

if(new.id is null,old.id,new.id),

if(new.coupon_id is null,old.coupon_id,new.coupon_id),

if(new.user_id is null,old.user_id,new.user_id),

if(new.order_id is null,old.order_id,new.order_id),

if(new.coupon_status is null,old.coupon_status,new.coupon_status),

if(new.get_time is null,old.get_time,new.get_time),

if(new.using_time is null,old.using_time,new.using_time),

if(new.used_time is null,old.used_time,new.used_time),

date_format(if(new.get_time is null,old.get_time,new.get_time),'yyyy-MM-dd')

from

(

select

id,

coupon_id,

user_id,

order_id,

coupon_status,

get_time,

using_time,

used_time

from ${APP}.dwd_fact_coupon_use

where dt in

(

select

date_format(get_time,'yyyy-MM-dd')

from ${APP}.ods_coupon_use

where dt='$do_date'

)

)old

full outer join

(

select

id,

coupon_id,

user_id,

order_id,

coupon_status,

get_time,

using_time,

used_time

from ${APP}.ods_coupon_use

where dt='$do_date'

)new

on old.id=new.id;

insert overwrite table ${APP}.dwd_fact_order_info partition(dt)

select

if(new.id is null,old.id,new.id),

if(new.order_status is null,old.order_status,new.order_status),

if(new.user_id is null,old.user_id,new.user_id),

if(new.out_trade_no is null,old.out_trade_no,new.out_trade_no),

if(new.tms['1001'] is null,old.create_time,new.tms['1001']),--1001 is the unpaid status

if(new.tms['1002'] is null,old.payment_time,new.tms['1002']),

if(new.tms['1003'] is null,old.cancel_time,new.tms['1003']),

if(new.tms['1004'] is null,old.finish_time,new.tms['1004']),

if(new.tms['1005'] is null,old.refund_time,new.tms['1005']),

if(new.tms['1006'] is null,old.refund_finish_time,new.tms['1006']),

if(new.province_id is null,old.province_id,new.province_id),

if(new.activity_id is null,old.activity_id,new.activity_id),

if(new.original_total_amount is null,old.original_total_amount,new.original_total_amount),

if(new.benefit_reduce_amount is null,old.benefit_reduce_amount,new.benefit_reduce_amount),

if(new.feight_fee is null,old.feight_fee,new.feight_fee),

if(new.final_total_amount is null,old.final_total_amount,new.final_total_amount),

date_format(if(new.tms['1001'] is null,old.create_time,new.tms['1001']),'yyyy-MM-dd')

from

(

select

id,

order_status,

user_id,

out_trade_no,

create_time,

payment_time,

cancel_time,

finish_time,

refund_time,

refund_finish_time,

province_id,

activity_id,

original_total_amount,

benefit_reduce_amount,

feight_fee,

final_total_amount

from ${APP}.dwd_fact_order_info

where dt

in

(

select

date_format(create_time,'yyyy-MM-dd')

from ${APP}.ods_order_info

where dt='$do_date'

)

)old

full outer join

(

select

info.id,

info.order_status,

info.user_id,

info.out_trade_no,

info.province_id,

act.activity_id,

log.tms,

info.original_total_amount,

info.benefit_reduce_amount,

info.feight_fee,

info.final_total_amount

from

(

select

order_id,

str_to_map(concat_ws(',',collect_set(concat(order_status,'=',operate_time))),',','=') tms

from ${APP}.ods_order_status_log

where dt='$do_date'

group by order_id

)log

join

(

select * from ${APP}.ods_order_info where dt='$do_date'

)info

on log.order_id=info.id

left join

(

select * from ${APP}.ods_activity_order where dt='$do_date'

)act

on log.order_id=act.order_id

)new

on old.id=new.id;

"

sql2="

insert overwrite table ${APP}.dwd_dim_base_province

select

bp.id,

bp.name,

bp.area_code,

bp.iso_code,

bp.region_id,

br.region_name

from ${APP}.ods_base_province bp

join ${APP}.ods_base_region br

on bp.region_id=br.id;

"

sql3="

insert overwrite table ${APP}.dwd_dim_user_info_his_tmp

select * from

(

select

id,

name,

birthday,

gender,

email,

user_level,

create_time,

operate_time,

'$do_date' start_date,

'9999-99-99' end_date

from ${APP}.ods_user_info where dt='$do_date'

union all

select

uh.id,

uh.name,

uh.birthday,

uh.gender,

uh.email,

uh.user_level,

uh.create_time,

uh.operate_time,

uh.start_date,

if(ui.id is not null and uh.end_date='9999-99-99', date_add(ui.dt,-1), uh.end_date) end_date

from ${APP}.dwd_dim_user_info_his uh left join

(

select

*

from ${APP}.ods_user_info

where dt='$do_date'

) ui on uh.id=ui.id

)his

order by his.id, start_date;

insert overwrite table ${APP}.dwd_dim_user_info_his

select * from ${APP}.dwd_dim_user_info_his_tmp;

"

case $1 in

"first"){

$hive -e "$sql1$sql2"

};;

"all"){

$hive -e "$sql1$sql3"

};;

esac
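
The `dwd_fact_order_detail` load in the script above splits each order-level amount across its detail rows in proportion to `order_price*sku_num`, rounds every share to two decimals, and lets the row with `rn=1` absorb the rounding drift so the shares add up to the order total again. The Python sketch below illustrates that idea under stated assumptions: `split_amount` is a hypothetical helper, amounts are `Decimal` values, and half-up rounding is assumed to match `round(x,2)`.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")

def split_amount(lines, order_total, original_total):
    """Split order_total across detail rows.

    lines: list of (detail_id, order_price, sku_num).
    Returns {detail_id: share}, with shares summing exactly to order_total.
    """
    if not lines:
        return {}
    shares = {}
    for detail_id, price, num in lines:
        raw = price * num / original_total * order_total
        shares[detail_id] = raw.quantize(CENT, rounding=ROUND_HALF_UP)
    # rn = 1 in the query is the detail row with the largest id
    last_id = max(shares)
    # total - (sum_of_shares - own_share) equals own_share - drift
    drift = sum(shares.values()) - order_total
    shares[last_id] -= drift
    return shares

# Three equal items, 30.00 before discounts, 20.00 actually paid.
lines = [(1, Decimal("10"), 1), (2, Decimal("10"), 1), (3, Decimal("10"), 1)]
shares = split_amount(lines, Decimal("20"), Decimal("30"))
print(shares)  # rows 1 and 2 get 6.67, row 3 gets 6.66
```

Without the correction the three rounded shares would total 20.01, one cent more than the order.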

# Grant permissions:

chmod 777 ods_to_dwd_db.sh

# Run the script for the initial load:

ods_to_dwd_db.sh first 2020-06-14
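
The script takes a mode as its first argument and an optional date as its second. As written, `first` runs `sql1` plus `sql2` (the province dimension), `all` runs `sql1` plus `sql3` (the user history table), and any other mode runs nothing. A small Python sketch of that dispatch follows; `resolve_run` is a hypothetical name, and the `today` parameter exists only to make the example deterministic.

```python
from datetime import date, timedelta

def resolve_run(argv, today=None):
    """argv mirrors the script arguments: [mode] or [mode, do_date]."""
    today = today or date.today()
    mode = argv[0] if argv else ""
    if len(argv) > 1 and argv[1]:
        do_date = argv[1]
    else:
        # same default as: date -d "-1 day" +%F
        do_date = (today - timedelta(days=1)).isoformat()
    plans = {"first": ["sql1", "sql2"], "all": ["sql1", "sql3"]}
    return do_date, plans.get(mode, [])

print(resolve_run(["first", "2020-06-14"]))
print(resolve_run(["all"], today=date(2020, 6, 16)))
```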

# Run the script for the daily load:

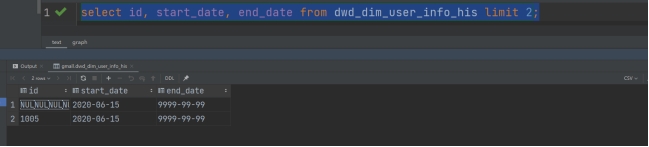

ods_to_dwd_db.sh all 2020-06-15

Check that the data was imported:

select id, start_date, end_date from dwd_dim_user_info_his limit 2;

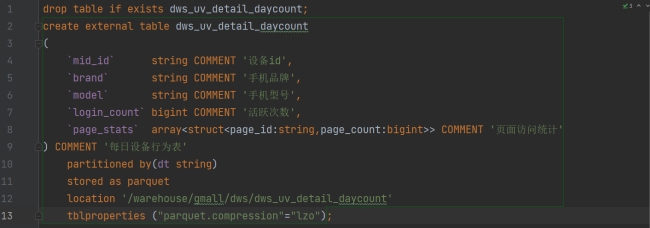
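
The `start_date` and `end_date` columns queried above come from the `sql3` step, which maintains `dwd_dim_user_info_his` as a zipper (history) table: each user row changed on `$do_date` becomes a new open-ended version, and the previously open version of the same user is closed on the day before. The Python sketch below mirrors that logic on plain dictionaries. `update_zipper` and the sample rows are hypothetical, and duplicate ids within one day are not handled.

```python
from datetime import date, timedelta

OPEN_END = "9999-99-99"  # sentinel the script uses for "still current"

def update_zipper(history, changed, do_date):
    """history: rows with id, start_date, end_date plus attributes.
    changed: rows from the day's ODS partition (id plus attributes)."""
    day_before = (date.fromisoformat(do_date) - timedelta(days=1)).isoformat()
    changed_ids = {row["id"] for row in changed}
    # every changed row becomes a new open-ended version
    result = [dict(row, start_date=do_date, end_date=OPEN_END) for row in changed]
    for row in history:
        kept = dict(row)
        # close the current version of a user that changed today
        if row["id"] in changed_ids and row["end_date"] == OPEN_END:
            kept["end_date"] = day_before
        result.append(kept)
    return sorted(result, key=lambda r: (r["id"], r["start_date"]))

history = [
    {"id": "1", "name": "a", "start_date": "2020-06-14", "end_date": OPEN_END},
    {"id": "2", "name": "b", "start_date": "2020-06-14", "end_date": OPEN_END},
]
changed = [{"id": "1", "name": "a2"}, {"id": "3", "name": "c"}]
for row in update_zipper(history, changed, "2020-06-15"):
    print(row)
```

User 1 ends up with two versions (closed on 2020-06-14, reopened on 2020-06-15), user 2 is untouched, and user 3 appears for the first time.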

(6). Build the DWS-layer tables that summarize each subject's behavior for the current day;

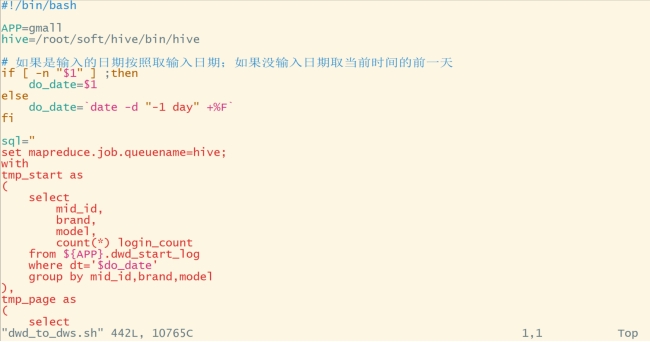

Only part of the SQL is shown. Create the DWS data loading script: vim dwd_to_dws.sh

#!/bin/bash

APP=gmall

hive=/root/soft/hive/bin/hive

# Use the date passed as the first argument if one is given; otherwise use the day before the current date

if [ -n "$1" ] ;then

do_date=$1

else

do_date=`date -d "-1 day" +%F`

fi

sql="

set mapreduce.job.queuename=hive;

with

tmp_start as

(

select

mid_id,

brand,

model,

count(*) login_count

from ${APP}.dwd_start_log

where dt='$do_date'

group by mid_id,brand,model

),

tmp_page as

(

select

mid_id,

brand,

model,

collect_set(named_struct('page_id',page_id,'page_count',page_count)) page_stats

from

(

select

mid_id,

brand,

model,

page_id,

count(*) page_count

from ${APP}.dwd_page_log

where dt='$do_date'

group by mid_id,brand,model,page_id

)tmp

group by mid_id,brand,model

)

insert overwrite table ${APP}.dws_uv_detail_daycount partition(dt='$do_date')

select

nvl(tmp_start.mid_id,tmp_page.mid_id),

nvl(tmp_start.brand,tmp_page.brand),

nvl(tmp_start.model,tmp_page.model),

tmp_start.login_count,

tmp_page.page_stats

from tmp_start

full outer join tmp_page

on tmp_start.mid_id=tmp_page.mid_id

and tmp_start.brand=tmp_page.brand

and tmp_start.model=tmp_page.model;

with

tmp_login as

(

select

user_id,

count(*) login_count

from ${APP}.dwd_start_log

where dt='$do_date'

and user_id is not null

group by user_id

),

tmp_cart as

(

select

user_id,

count(*) cart_count

from ${APP}.dwd_action_log

where dt='$do_date'

and user_id is not null

and action_id='cart_add'

group by user_id

),

tmp_order as

(

select

user_id,

count(*) order_count,

sum(final_total_amount) order_amount

from ${APP}.dwd_fact_order_info

where dt='$do_date'

group by user_id

) ,

tmp_payment as

(

select

user_id,

count(*) payment_count,

sum(payment_amount) payment_amount

from ${APP}.dwd_fact_payment_info

where dt='$do_date'

group by user_id

),

tmp_order_detail as

(

select

user_id,

collect_set(named_struct('sku_id',sku_id,'sku_num',sku_num,'order_count',order_count,'order_amount',order_amount)) order_stats

from

(

select

user_id,

sku_id,

sum(sku_num) sku_num,

count(*) order_count,

cast(sum(final_amount_d) as decimal(20,2)) order_amount

from ${APP}.dwd_fact_order_detail

where dt='$do_date'

group by user_id,sku_id

)tmp

group by user_id

)

insert overwrite table ${APP}.dws_user_action_daycount partition(dt='$do_date')

select

tmp_login.user_id,

login_count,

nvl(cart_count,0),

nvl(order_count,0),

nvl(order_amount,0.0),

nvl(payment_count,0),

nvl(payment_amount,0.0),

order_stats

from tmp_login

left outer join tmp_cart on tmp_login.user_id=tmp_cart.user_id

left outer join tmp_order on tmp_login.user_id=tmp_order.user_id

left outer join tmp_payment on tmp_login.user_id=tmp_payment.user_id

left outer join tmp_order_detail on tmp_login.user_id=tmp_order_detail.user_id;

with

tmp_order as

(

select

sku_id,

count(*) order_count,

sum(sku_num) order_num,

sum(final_amount_d) order_amount

from ${APP}.dwd_fact_order_detail

where dt='$do_date'

group by sku_id

),

tmp_payment as

(

select

sku_id,

count(*) payment_count,

sum(sku_num) payment_num,

sum(final_amount_d) payment_amount

from ${APP}.dwd_fact_order_detail

where (dt='$do_date'

or dt=date_add('$do_date',-1))

and order_id in

(

select

id

from ${APP}.dwd_fact_order_info

where (dt='$do_date'

or dt=date_add('$do_date',-1))

and date_format(payment_time,'yyyy-MM-dd')='$do_date'

)

group by sku_id

),

tmp_refund as

(

select

sku_id,

count(*) refund_count,

sum(refund_num) refund_num,

sum(refund_amount) refund_amount

from ${APP}.dwd_fact_order_refund_info

where dt='$do_date'

group by sku_id

),

tmp_cart as

(

select

item sku_id,

count(*) cart_count

from ${APP}.dwd_action_log

where dt='$do_date'

and user_id is not null

and action_id='cart_add'

group by item

),

tmp_favor as

(

select

item sku_id,

count(*) favor_count

from ${APP}.dwd_action_log

where dt='$do_date'

and user_id is not null

and action_id='favor_add'

group by item

),

tmp_appraise as

(

select

sku_id,

sum(if(appraise='1201',1,0)) appraise_good_count,

sum(if(appraise='1202',1,0)) appraise_mid_count,

sum(if(appraise='1203',1,0)) appraise_bad_count,

sum(if(appraise='1204',1,0)) appraise_default_count

from ${APP}.dwd_fact_comment_info

where dt='$do_date'

group by sku_id

)

insert overwrite table ${APP}.dws_sku_action_daycount partition(dt='$do_date')

select

sku_id,

sum(order_count),

sum(order_num),

sum(order_amount),

sum(payment_count),

sum(payment_num),

sum(payment_amount),

sum(refund_count),

sum(refund_num),

sum(refund_amount),

sum(cart_count),

sum(favor_count),

sum(appraise_good_count),

sum(appraise_mid_count),

sum(appraise_bad_count),

sum(appraise_default_count)

from

(

select

sku_id,

order_count,

order_num,

order_amount,

0 payment_count,

0 payment_num,

0 payment_amount,

0 refund_count,

0 refund_num,

0 refund_amount,

0 cart_count,

0 favor_count,

0 appraise_good_count,

0 appraise_mid_count,

0 appraise_bad_count,

0 appraise_default_count

from tmp_order

union all

select

sku_id,

0 order_count,

0 order_num,

0 order_amount,

payment_count,

payment_num,

payment_amount,

0 refund_count,

0 refund_num,

0 refund_amount,

0 cart_count,

0 favor_count,

0 appraise_good_count,

0 appraise_mid_count,

0 appraise_bad_count,

0 appraise_default_count

from tmp_payment

union all

select

sku_id,

0 order_count,

0 order_num,

0 order_amount,

0 payment_count,

0 payment_num,

0 payment_amount,

refund_count,

refund_num,

refund_amount,

0 cart_count,

0 favor_count,

0 appraise_good_count,

0 appraise_mid_count,

0 appraise_bad_count,

0 appraise_default_count

from tmp_refund

union all

select

sku_id,

0 order_count,

0 order_num,

0 order_amount,

0 payment_count,

0 payment_num,

0 payment_amount,

0 refund_count,

0 refund_num,

0 refund_amount,

cart_count,

0 favor_count,

0 appraise_good_count,

0 appraise_mid_count,

0 appraise_bad_count,

0 appraise_default_count

from tmp_cart

union all

select

sku_id,

0 order_count,

0 order_num,

0 order_amount,

0 payment_count,

0 payment_num,

0 payment_amount,

0 refund_count,

0 refund_num,

0 refund_amount,

0 cart_count,

favor_count,

0 appraise_good_count,

0 appraise_mid_count,

0 appraise_bad_count,

0 appraise_default_count

from tmp_favor

union all

select

sku_id,

0 order_count,

0 order_num,

0 order_amount,

0 payment_count,

0 payment_num,

0 payment_amount,

0 refund_count,

0 refund_num,

0 refund_amount,

0 cart_count,

0 favor_count,

appraise_good_count,

appraise_mid_count,

appraise_bad_count,

appraise_default_count

from tmp_appraise

)tmp

group by sku_id;

with

tmp_login as

(

select

area_code,

count(*) login_count

from ${APP}.dwd_start_log

where dt='$do_date'

group by area_code

),

tmp_op as

(

select

province_id,

sum(if(date_format(create_time,'yyyy-MM-dd')='$do_date',1,0)) order_count,

sum(if(date_format(create_time,'yyyy-MM-dd')='$do_date',final_total_amount,0)) order_amount,

sum(if(date_format(payment_time,'yyyy-MM-dd')='$do_date',1,0)) payment_count,

sum(if(date_format(payment_time,'yyyy-MM-dd')='$do_date',final_total_amount,0)) payment_amount

from ${APP}.dwd_fact_order_info

where (dt='$do_date' or dt=date_add('$do_date',-1))

group by province_id

)

insert overwrite table ${APP}.dws_area_stats_daycount partition(dt='$do_date')

select

pro.id,

pro.province_name,

pro.area_code,

pro.iso_code,

pro.region_id,

pro.region_name,

nvl(tmp_login.login_count,0),

nvl(tmp_op.order_count,0),

nvl(tmp_op.order_amount,0.0),

nvl(tmp_op.payment_count,0),

nvl(tmp_op.payment_amount,0.0)

from ${APP}.dwd_dim_base_province pro

left join tmp_login on pro.area_code=tmp_login.area_code

left join tmp_op on pro.id=tmp_op.province_id;

with

tmp_op as

(

select

activity_id,

sum(if(date_format(create_time,'yyyy-MM-dd')='$do_date',1,0)) order_count,

sum(if(date_format(create_time,'yyyy-MM-dd')='$do_date',final_total_amount,0)) order_amount,

sum(if(date_format(payment_time,'yyyy-MM-dd')='$do_date',1,0)) payment_count,

sum(if(date_format(payment_time,'yyyy-MM-dd')='$do_date',final_total_amount,0)) payment_amount

from ${APP}.dwd_fact_order_info

where (dt='$do_date' or dt=date_add('$do_date',-1))

and activity_id is not null

group by activity_id

),

tmp_display as

(

select

item activity_id,

count(*) display_count

from ${APP}.dwd_display_log

where dt='$do_date'

and item_type='activity_id'

group by item

),

tmp_activity as

(

select

*

from ${APP}.dwd_dim_activity_info

where dt='$do_date'

)

insert overwrite table ${APP}.dws_activity_info_daycount partition(dt='$do_date')

select

nvl(tmp_op.activity_id,tmp_display.activity_id),

tmp_activity.activity_name,

tmp_activity.activity_type,

tmp_activity.start_time,

tmp_activity.end_time,

tmp_activity.create_time,

tmp_display.display_count,

tmp_op.order_count,

tmp_op.order_amount,

tmp_op.payment_count,

tmp_op.payment_amount

from tmp_op

full outer join tmp_display on tmp_op.activity_id=tmp_display.activity_id

left join tmp_activity on nvl(tmp_op.activity_id,tmp_display.activity_id)=tmp_activity.id;

"

$hive -e "$sql"
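
For `dws_sku_action_daycount`, the script above does not chain joins between the per-metric subqueries. Each subquery is padded with zeros for the metrics it does not provide, the padded rows are stacked with `union all`, and one `group by sku_id` sums them. The Python sketch below shows the same pattern with a shortened, illustrative metric list; `merge_by_sku` and the sample values are hypothetical.

```python
from collections import defaultdict

# Shortened metric list for illustration; the real table has more columns.
METRICS = ["order_count", "payment_count", "refund_count", "cart_count", "favor_count"]

def merge_by_sku(*sources):
    """Each source maps sku_id -> {metric: value}; absent metrics count as 0."""
    totals = defaultdict(lambda: dict.fromkeys(METRICS, 0))
    for source in sources:           # union all
        for sku_id, metrics in source.items():
            for name, value in metrics.items():
                totals[sku_id][name] += value   # group by sku_id, sum(...)
    return dict(totals)

tmp_order = {"sku_1": {"order_count": 2}, "sku_2": {"order_count": 1}}
tmp_cart = {"sku_1": {"cart_count": 5}, "sku_3": {"cart_count": 1}}
merged = merge_by_sku(tmp_order, tmp_cart)
print(merged["sku_1"])
```

A SKU that appears in any one source gets a row, and every metric it lacks is 0 rather than NULL, which is why the final select needs no `nvl` calls.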

# Grant permissions:

chmod 777 dwd_to_dws.sh

# Run the script to load the data:

dwd_to_dws.sh 2020-06-15

Check the imported data:

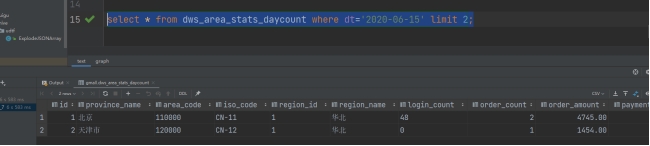

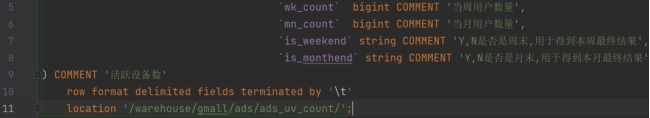

select * from dws_area_stats_daycount where dt='2020-06-15' limit 2;

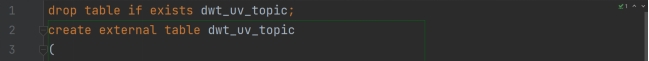
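
The order and payment columns of `dws_area_stats_daycount` are computed from two partitions of `dwd_fact_order_info` (`$do_date` and the day before), counting an order when its `create_time` falls on `$do_date` and a payment when its `payment_time` does. The two-day scan presumably reflects an assumption that an order is paid no later than the day after it was created; the source does not state this. A Python sketch of the counting rule, with hypothetical sample orders:

```python
from collections import defaultdict
from datetime import date, timedelta

def area_order_stats(orders, do_date):
    """Aggregate per province. Each order is a dict with dt, province_id,
    create_time, payment_time (or None) and final_total_amount."""
    prev = (date.fromisoformat(do_date) - timedelta(days=1)).isoformat()
    stats = defaultdict(lambda: {"order_count": 0, "order_amount": 0.0,
                                 "payment_count": 0, "payment_amount": 0.0})
    for order in orders:
        # only the partitions for do_date and the day before are scanned
        if order["dt"] not in (do_date, prev):
            continue
        row = stats[order["province_id"]]
        if order["create_time"][:10] == do_date:
            row["order_count"] += 1
            row["order_amount"] += order["final_total_amount"]
        if (order["payment_time"] or "")[:10] == do_date:
            row["payment_count"] += 1
            row["payment_amount"] += order["final_total_amount"]
    return dict(stats)

orders = [
    {"dt": "2020-06-14", "province_id": "1", "create_time": "2020-06-14 23:50:00",
     "payment_time": "2020-06-15 00:05:00", "final_total_amount": 100.0},
    {"dt": "2020-06-15", "province_id": "1", "create_time": "2020-06-15 10:00:00",
     "payment_time": None, "final_total_amount": 50.0},
    {"dt": "2020-06-13", "province_id": "2", "create_time": "2020-06-13 10:00:00",
     "payment_time": "2020-06-15 10:00:00", "final_total_amount": 70.0},
]
stats = area_order_stats(orders, "2020-06-15")
print(stats)
```

The third order is paid on 2020-06-15 but sits in the 2020-06-13 partition, so it is not counted. The SQL has the same limitation.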

(7). Build the DWT-layer tables that accumulate each subject's behavior over time;

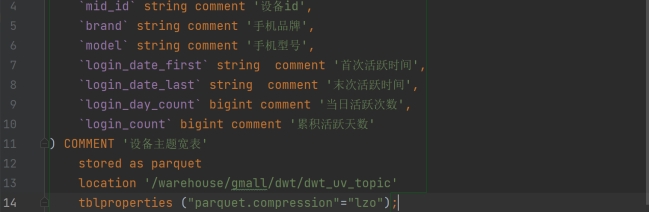

Only part of the SQL is shown. Create the DWT data loading script: vim dws_to_dwt.sh

#!/bin/bash

APP=gmall

hive=/root/soft/hive/bin/hive

# Use the date passed as the first argument if one is given; otherwise use the day before the current date

if [ -n "$1" ] ;then

do_date=$1

else

do_date=`date -d "-1 day" +%F`

fi

sql="

set mapreduce.job.queuename=hive;

insert overwrite table ${APP}.dwt_uv_topic

select

nvl(new.mid_id,old.mid_id),

nvl(new.model,old.model),

nvl(new.brand,old.brand),

if(old.mid_id is null,'$do_date',old.login_date_first),

if(new.mid_id is not null,'$do_date',old.login_date_last),

if(new.mid_id is not null, new.login_count,0),

nvl(old.login_count,0)+if(new.login_count>0,1,0)

from

(

select

*

from ${APP}.dwt_uv_topic

)old

full outer join

(

select

*

from ${APP}.dws_uv_detail_daycount

where dt='$do_date'

)new

on old.mid_id=new.mid_id;

insert overwrite table ${APP}.dwt_user_topic

select

nvl(new.user_id,old.user_id),

if(old.login_date_first is null and new.login_count>0,'$do_date',old.login_date_first),

if(new.login_count>0,'$do_date',old.login_date_last),

nvl(old.login_count,0)+if(new.login_count>0,1,0),

nvl(new.login_last_30d_count,0),

if(old.order_date_first is null and new.order_count>0,'$do_date',old.order_date_first),

if(new.order_count>0,'$do_date',old.order_date_last),

nvl(old.order_count,0)+nvl(new.order_count,0),

nvl(old.order_amount,0)+nvl(new.order_amount,0),

nvl(new.order_last_30d_count,0),

nvl(new.order_last_30d_amount,0),

if(old.payment_date_first is null and new.payment_count>0,'$do_date',old.payment_date_first),

if(new.payment_count>0,'$do_date',old.payment_date_last),

nvl(old.payment_count,0)+nvl(new.payment_count,0),

nvl(old.payment_amount,0)+nvl(new.payment_amount,0),

nvl(new.payment_last_30d_count,0),

nvl(new.payment_last_30d_amount,0)

from

${APP}.dwt_user_topic old

full outer join

(

select

user_id,

sum(if(dt='$do_date',login_count,0)) login_count,

sum(if(dt='$do_date',order_count,0)) order_count,

sum(if(dt='$do_date',order_amount,0)) order_amount,

sum(if(dt='$do_date',payment_count,0)) payment_count,

sum(if(dt='$do_date',payment_amount,0)) payment_amount,

sum(if(login_count>0,1,0)) login_last_30d_count,

sum(order_count) order_last_30d_count,

sum(order_amount) order_last_30d_amount,

sum(payment_count) payment_last_30d_count,

sum(payment_amount) payment_last_30d_amount

from ${APP}.dws_user_action_daycount

where dt>=date_add( '$do_date',-30)

group by user_id

)new

on old.user_id=new.user_id;

insert overwrite table ${APP}.dwt_sku_topic

select

nvl(new.sku_id,old.sku_id),

sku_info.spu_id,

nvl(new.order_count30,0),

nvl(new.order_num30,0),

nvl(new.order_amount30,0),

nvl(old.order_count,0) + nvl(new.order_count,0),

nvl(old.order_num,0) + nvl(new.order_num,0),

nvl(old.order_amount,0) + nvl(new.order_amount,0),

nvl(new.payment_count30,0),

nvl(new.payment_num30,0),

nvl(new.payment_amount30,0),

nvl(old.payment_count,0) + nvl(new.payment_count,0),

nvl(old.payment_num,0) + nvl(new.payment_num,0),

nvl(old.payment_amount,0) + nvl(new.payment_amount,0),

nvl(new.refund_count30,0),

nvl(new.refund_num30,0),

nvl(new.refund_amount30,0),

nvl(old.refund_count,0) + nvl(new.refund_count,0),

nvl(old.refund_num,0) + nvl(new.refund_num,0),

nvl(old.refund_amount,0) + nvl(new.refund_amount,0),

nvl(new.cart_count30,0),

nvl(old.cart_count,0) + nvl(new.cart_count,0),

nvl(new.favor_count30,0),

nvl(old.favor_count,0) + nvl(new.favor_count,0),

nvl(new.appraise_good_count30,0),

nvl(new.appraise_mid_count30,0),

nvl(new.appraise_bad_count30,0),

nvl(new.appraise_default_count30,0) ,

nvl(old.appraise_good_count,0) + nvl(new.appraise_good_count,0),

nvl(old.appraise_mid_count,0) + nvl(new.appraise_mid_count,0),

nvl(old.appraise_bad_count,0) + nvl(new.appraise_bad_count,0),

nvl(old.appraise_default_count,0) + nvl(new.appraise_default_count,0)

from

(

select

sku_id,

spu_id,

order_last_30d_count,

order_last_30d_num,

order_last_30d_amount,

order_count,

order_num,

order_amount ,

payment_last_30d_count,

payment_last_30d_num,

payment_last_30d_amount,

payment_count,

payment_num,

payment_amount,

refund_last_30d_count,

refund_last_30d_num,

refund_last_30d_amount,

refund_count,

refund_num,

refund_amount,

cart_last_30d_count,

cart_count,

favor_last_30d_count,

favor_count,

appraise_last_30d_good_count,

appraise_last_30d_mid_count,

appraise_last_30d_bad_count,

appraise_last_30d_default_count,

appraise_good_count,

appraise_mid_count,

appraise_bad_count,

appraise_default_count

from ${APP}.dwt_sku_topic

)old

full outer join

(

select

sku_id,

sum(if(dt='$do_date', order_count,0 )) order_count,

sum(if(dt='$do_date',order_num ,0 )) order_num,

sum(if(dt='$do_date',order_amount,0 )) order_amount ,

sum(if(dt='$do_date',payment_count,0 )) payment_count,

sum(if(dt='$do_date',payment_num,0 )) payment_num,

sum(if(dt='$do_date',payment_amount,0 )) payment_amount,

sum(if(dt='$do_date',refund_count,0 )) refund_count,

sum(if(dt='$do_date',refund_num,0 )) refund_num,

sum(if(dt='$do_date',refund_amount,0 )) refund_amount,

sum(if(dt='$do_date',cart_count,0 )) cart_count,

sum(if(dt='$do_date',favor_count,0 )) favor_count,

sum(if(dt='$do_date',appraise_good_count,0 )) appraise_good_count,

sum(if(dt='$do_date',appraise_mid_count,0 ) ) appraise_mid_count ,

sum(if(dt='$do_date',appraise_bad_count,0 )) appraise_bad_count,

sum(if(dt='$do_date',appraise_default_count,0 )) appraise_default_count,

sum(order_count) order_count30 ,

sum(order_num) order_num30,

sum(order_amount) order_amount30,

sum(payment_count) payment_count30,

sum(payment_num) payment_num30,

sum(payment_amount) payment_amount30,

sum(refund_count) refund_count30,

sum(refund_num) refund_num30,

sum(refund_amount) refund_amount30,

sum(cart_count) cart_count30,

sum(favor_count) favor_count30,

sum(appraise_good_count) appraise_good_count30,

sum(appraise_mid_count) appraise_mid_count30,

sum(appraise_bad_count) appraise_bad_count30,

sum(appraise_default_count) appraise_default_count30

from ${APP}.dws_sku_action_daycount

where dt >= date_add ('$do_date', -30)

group by sku_id

)new

on new.sku_id = old.sku_id

left join

(select * from ${APP}.dwd_dim_sku_info where dt='$do_date') sku_info

on nvl(new.sku_id,old.sku_id)= sku_info.id;

insert overwrite table ${APP}.dwt_activity_topic

select

nvl(new.id,old.id),

nvl(new.activity_name,old.activity_name),

nvl(new.activity_type,old.activity_type),

nvl(new.start_time,old.start_time),

nvl(new.end_time,old.end_time),

nvl(new.create_time,old.create_time),

nvl(new.display_count,0),

nvl(new.order_count,0),

nvl(new.order_amount,0.0),

nvl(new.payment_count,0),

nvl(new.payment_amount,0.0),

nvl(new.display_count,0)+nvl(old.display_count,0),

nvl(new.order_count,0)+nvl(old.order_count,0),

nvl(new.order_amount,0.0)+nvl(old.order_amount,0.0),

nvl(new.payment_count,0)+nvl(old.payment_count,0),

nvl(new.payment_amount,0.0)+nvl(old.payment_amount,0.0)

from

(

select

*

from ${APP}.dwt_activity_topic

)old

full outer join

(

select

*

from ${APP}.dws_activity_info_daycount

where dt='$do_date'

)new

on old.id=new.id;

insert overwrite table ${APP}.dwt_area_topic

select

nvl(old.id,new.id),

nvl(old.province_name,new.province_name),

nvl(old.area_code,new.area_code),

nvl(old.iso_code,new.iso_code),

nvl(old.region_id,new.region_id),

nvl(old.region_name,new.region_name),

nvl(new.login_day_count,0),

nvl(new.login_last_30d_count,0),

nvl(new.order_day_count,0),

nvl(new.order_day_amount,0.0),

nvl(new.order_last_30d_count,0),

nvl(new.order_last_30d_amount,0.0),

nvl(new.payment_day_count,0),

nvl(new.payment_day_amount,0.0),

nvl(new.payment_last_30d_count,0),

nvl(new.payment_last_30d_amount,0.0)

from

(

select

*

from ${APP}.dwt_area_topic

)old

full outer join

(

select

id,

province_name,

area_code,

iso_code,

region_id,

region_name,

sum(if(dt='$do_date',login_count,0)) login_day_count,

sum(if(dt='$do_date',order_count,0)) order_day_count,

sum(if(dt='$do_date',order_amount,0.0)) order_day_amount,

sum(if(dt='$do_date',payment_count,0)) payment_day_count,

sum(if(dt='$do_date',payment_amount,0.0)) payment_day_amount,

sum(login_count) login_last_30d_count,

sum(order_count) order_last_30d_count,

sum(order_amount) order_last_30d_amount,

sum(payment_count) payment_last_30d_count,

sum(payment_amount) payment_last_30d_amount

from ${APP}.dws_area_stats_daycount

where dt>=date_add('$do_date',-30)

group by id,province_name,area_code,iso_code,region_id,region_name

)new

on old.id=new.id;

"

$hive -e "$sql"
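
Each DWT statement in the script above follows the same pattern: full outer join the existing topic table (`old`) with the day's DWS data (`new`), then decide column by column whether to keep the old value, take the new one, or add the two. The Python sketch below walks through the `dwt_uv_topic` rules. `merge_uv_topic` is a hypothetical helper and the key name `login_day_count` is an assumption, since the table definition is not shown in this section.

```python
def merge_uv_topic(old, new, do_date):
    """old: mid_id -> {login_date_first, login_date_last, login_count}
    new: mid_id -> {login_count} for do_date (value may be None)."""
    merged = {}
    for mid in old.keys() | new.keys():      # full outer join on mid_id
        o, n = old.get(mid), new.get(mid)
        active_today = n is not None and (n["login_count"] or 0) > 0
        merged[mid] = {
            # first seen today only if there is no old row
            "login_date_first": do_date if o is None else o["login_date_first"],
            # last seen today if the device appears in today's data
            "login_date_last": do_date if n is not None else o["login_date_last"],
            # today's own count
            "login_day_count": n["login_count"] if n is not None else 0,
            # cumulative number of active days
            "login_count": (o["login_count"] if o is not None else 0)
                           + (1 if active_today else 0),
        }
    return merged

old = {
    "m1": {"login_date_first": "2020-06-14", "login_date_last": "2020-06-14", "login_count": 1},
    "m2": {"login_date_first": "2020-06-14", "login_date_last": "2020-06-14", "login_count": 1},
}
new = {"m1": {"login_count": 3}, "m3": {"login_count": 1}}
out = merge_uv_topic(old, new, "2020-06-15")
print(out["m1"])
```

Because the statement overwrites the table it reads from, running it twice for the same date would add the day to `login_count` twice.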

# Grant permissions:

chmod 777 dws_to_dwt.sh

# Run the script to load the data:

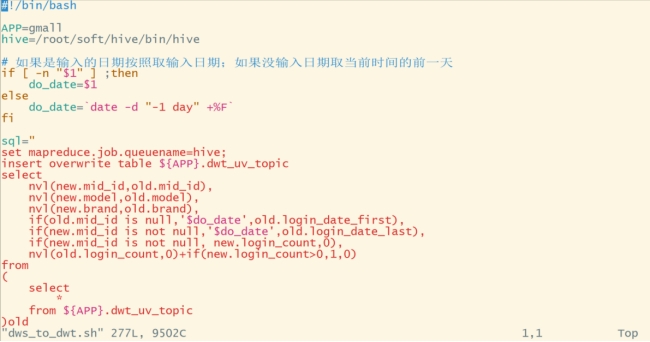

dws_to_dwt.sh 2020-06-15

Check the imported data:

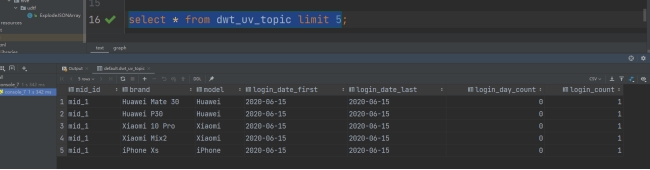

select * from dwt_uv_topic limit 5;

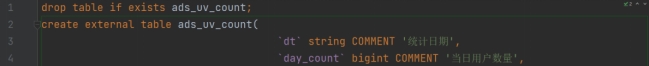

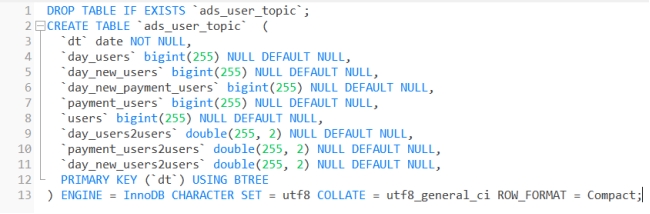
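
Several DWT statements compute the current-day value and the rolling "last 30 days" value in a single pass over the DWS daily table: rows are filtered with `dt>=date_add('$do_date',-30)`, the day's value is `sum(if(dt='$do_date',x,0))`, and the rolling value is a plain `sum(x)`. The Python sketch below reproduces that for one metric; `rolling_stats` and the sample rows are hypothetical.

```python
from datetime import date, timedelta

def rolling_stats(daily_rows, do_date, days=30):
    """daily_rows: dicts with dt, user_id, order_count.
    Returns user_id -> {order_count, order_last_30d_count}."""
    start = (date.fromisoformat(do_date) - timedelta(days=days)).isoformat()
    out = {}
    for row in daily_rows:
        if row["dt"] < start:   # mirrors: where dt >= date_add(do_date, -30)
            continue
        stats = out.setdefault(row["user_id"],
                               {"order_count": 0, "order_last_30d_count": 0})
        if row["dt"] == do_date:
            stats["order_count"] += row["order_count"]       # the day's value
        stats["order_last_30d_count"] += row["order_count"]  # the rolling value
    return out

rows = [
    {"dt": "2020-06-15", "user_id": "u1", "order_count": 2},
    {"dt": "2020-06-01", "user_id": "u1", "order_count": 1},
    {"dt": "2020-05-16", "user_id": "u1", "order_count": 4},  # exactly 30 days back
    {"dt": "2020-05-15", "user_id": "u1", "order_count": 8},  # outside the window
    {"dt": "2020-06-10", "user_id": "u2", "order_count": 3},
]
result = rolling_stats(rows, "2020-06-15")
print(result)
```

Two properties of the filter as written are worth knowing. The lower bound is inclusive, so the window spans 31 calendar dates. There is also no upper bound, so back-filling an older `$do_date` would count any later partitions that already exist.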

(8). Build the ADS-layer metric analysis tables for all subjects;

Only part of the SQL is shown. Create the ADS data loading script: vim dwt_to_ads.sh

#!/bin/bash

hive=/root/soft/hive/bin/hive

APP=gmall

# Use the date passed as the first argument if one is given; otherwise use the day before the current date

if [ -n "$1" ] ;then

do_date=$1

else

do_date=`date -d "-1 day" +%F`

fi

sql="

set mapreduce.job.queuename=hive;

insert into table ${APP}.ads_uv_count

select

'$do_date' dt,

daycount.ct,

wkcount.ct,

mncount.ct,

if(date_add(next_day('$do_date','MO'),-1)='$do_date','Y','N') ,

if(last_day('$do_date')='$do_date','Y','N')

from

(

select

'$do_date' dt,

count(*) ct

from ${APP}.dwt_uv_topic

where login_date_last='$do_date'

)daycount join

(

select

'$do_date' dt,

count (*) ct

from ${APP}.dwt_uv_topic

where login_date_last>=date_add(next_day('$do_date','MO'),-7)

and login_date_last<= date_add(next_day('$do_date','MO'),-1)

) wkcount on daycount.dt=wkcount.dt

join

(

select

'$do_date' dt,

count (*) ct

from ${APP}.dwt_uv_topic

where date_format(login_date_last,'yyyy-MM')=date_format('$do_date','yyyy-MM')

)mncount on daycount.dt=mncount.dt;

insert into table ${APP}.ads_new_mid_count

select

login_date_first,

count(*)

from ${APP}.dwt_uv_topic

where login_date_first='$do_date'

group by login_date_first;

insert into table ${APP}.ads_silent_count

select

'$do_date',

count(*)

from ${APP}.dwt_uv_topic

where login_date_first=login_date_last

and login_date_last<=date_add('$do_date',-7);

insert into table ${APP}.ads_back_count

select

'$do_date',

concat(date_add(next_day('$do_date','MO'),-7),'_', date_add(next_day('$do_date','MO'),-1)),

count(*)

from

(

select

mid_id

from ${APP}.dwt_uv_topic

where login_date_last>=date_add(next_day('$do_date','MO'),-7)

and login_date_last<= date_add(next_day('$do_date','MO'),-1)

and login_date_first<date_add(next_day('$do_date','MO'),-7)

)current_wk

left join

(

select

mid_id

from ${APP}.dws_uv_detail_daycount

where dt>=date_add(next_day('$do_date','MO'),-7*2)

and dt<= date_add(next_day('$do_date','MO'),-7-1)

group by mid_id

)last_wk

on current_wk.mid_id=last_wk.mid_id

where last_wk.mid_id is null;

insert into table ${APP}.ads_wastage_count

select

'$do_date',

count(*)

from

(

select

mid_id

from ${APP}.dwt_uv_topic

where login_date_last<=date_add('$do_date',-7)

group by mid_id

)t1;

insert into table ${APP}.ads_user_retention_day_rate

select

'$do_date',--statistics date

date_add('$do_date',-1),--date the devices were added

1,--retention days

sum(if(login_date_first=date_add('$do_date',-1) and login_date_last='$do_date',1,0)),--1-day retention count as of $do_date

sum(if(login_date_first=date_add('$do_date',-1),1,0)),--devices added on that date

sum(if(login_date_first=date_add('$do_date',-1) and login_date_last='$do_date',1,0))/sum(if(login_date_first=date_add('$do_date',-1),1,0))*100

from ${APP}.dwt_uv_topic

union all

select

'$do_date',--statistics date

date_add('$do_date',-2),--date the devices were added

2,--retention days

sum(if(login_date_first=date_add('$do_date',-2) and login_date_last='$do_date',1,0)),--2-day retention count as of $do_date

sum(if(login_date_first=date_add('$do_date',-2),1,0)),--devices added on that date

sum(if(login_date_first=date_add('$do_date',-2) and login_date_last='$do_date',1,0))/sum(if(login_date_first=date_add('$do_date',-2),1,0))*100

from ${APP}.dwt_uv_topic

union all

select

'$do_date',--statistics date

date_add('$do_date',-3),--date the devices were added

3,--retention days

sum(if(login_date_first=date_add('$do_date',-3) and login_date_last='$do_date',1,0)),--3-day retention count as of $do_date

sum(if(login_date_first=date_add('$do_date',-3),1,0)),--devices added on that date

sum(if(login_date_first=date_add('$do_date',-3) and login_date_last='$do_date',1,0))/sum(if(login_date_first=date_add('$do_date',-3),1,0))*100

from ${APP}.dwt_uv_topic;

insert into table ${APP}.ads_continuity_wk_count

select

'$do_date',

concat(date_add(next_day('$do_date','MO'),-7*3),'_',date_add(next_day('$do_date','MO'),-1)),

count(*)

from

(

select

mid_id

from

(

select

mid_id

from ${APP}.dws_uv_detail_daycount

where dt>=date_add(next_day('$do_date','monday'),-7)

and dt<=date_add(next_day('$do_date','monday'),-1)

group by mid_id

union all

select

mid_id

from ${APP}.dws_uv_detail_daycount

where dt>=date_add(next_day('$do_date','monday'),-7*2)

and dt<=date_add(next_day('$do_date','monday'),-7-1)

group by mid_id

union all

select

mid_id

from ${APP}.dws_uv_detail_daycount

where dt>=date_add(next_day('$do_date','monday'),-7*3)

and dt<=date_add(next_day('$do_date','monday'),-7*2-1)

group by mid_id

)t1

group by mid_id

having count(*)=3

)t2;

insert into table ${APP}.ads_continuity_uv_count

select

'$do_date',

concat(date_add('$do_date',-6),'_','$do_date'),

count(*)

from

(

select mid_id

from

(

select mid_id

from

(

select

mid_id,

date_sub(dt,rank) date_dif

from

(

select

mid_id,

dt,

rank() over(partition by mid_id order by dt) rank

from ${APP}.dws_uv_detail_daycount

where dt>=date_add('$do_date',-6) and dt<='$do_date'

)t1

)t2

group by mid_id,date_dif

having count(*)>=3

)t3

group by mid_id

)t4;

insert into table ${APP}.ads_user_topic

select

'$do_date',

sum(if(login_date_last='$do_date',1,0)),

sum(if(login_date_first='$do_date',1,0)),

sum(if(payment_date_first='$do_date',1,0)),

sum(if(payment_count>0,1,0)),

count(*),

sum(if(login_date_last='$do_date',1,0))/count(*),

sum(if(payment_count>0,1,0))/count(*),

sum(if(login_date_first='$do_date',1,0))/sum(if(login_date_last='$do_date',1,0))

from ${APP}.dwt_user_topic;

with

tmp_uv as

(

select

'$do_date' dt,

sum(if(array_contains(pages,'home'),1,0)) home_count,

sum(if(array_contains(pages,'good_detail'),1,0)) good_detail_count

from

(

select

mid_id,

collect_set(page_id) pages

from ${APP}.dwd_page_log

where dt='$do_date'

and page_id in ('home','good_detail')

group by mid_id

)tmp

),

tmp_cop as

(

select

'$do_date' dt,

sum(if(cart_count>0,1,0)) cart_count,

sum(if(order_count>0,1,0)) order_count,

sum(if(payment_count>0,1,0)) payment_count

from ${APP}.dws_user_action_daycount

where dt='$do_date'

)

insert into table ${APP}.ads_user_action_convert_day

select

tmp_uv.dt,

tmp_uv.home_count,

tmp_uv.good_detail_count,

tmp_uv.good_detail_count/tmp_uv.home_count*100,

tmp_cop.cart_count,

tmp_cop.cart_count/tmp_uv.good_detail_count*100,

tmp_cop.order_count,

tmp_cop.order_count/tmp_cop.cart_count*100,

tmp_cop.payment_count,

tmp_cop.payment_count/tmp_cop.order_count*100

from tmp_uv

join tmp_cop

on tmp_uv.dt=tmp_cop.dt;

insert into table ${APP}.ads_product_info

select

'$do_date' dt,

sku_num,

spu_num

from

(

select

'$do_date' dt,

count(*) sku_num

from

${APP}.dwt_sku_topic

) tmp_sku_num

join

(

select

'$do_date' dt,

count(*) spu_num

from

(

select

spu_id

from

${APP}.dwt_sku_topic

group by

spu_id

) tmp_spu_id

) tmp_spu_num

on

tmp_sku_num.dt=tmp_spu_num.dt;

insert into table ${APP}.ads_product_sale_topN

select

'$do_date' dt,

sku_id,

payment_amount

from

${APP}.dws_sku_action_daycount

where

dt='$do_date'

order by payment_amount desc

limit 10;

insert into table ${APP}.ads_product_favor_topN

select

'$do_date' dt,

sku_id,

favor_count

from

${APP}.dws_sku_action_daycount

where

dt='$do_date'

order by favor_count desc

limit 10;

insert into table ${APP}.ads_product_cart_topN

select

'$do_date' dt,

sku_id,

cart_count

from

${APP}.dws_sku_action_daycount

where

dt='$do_date'

order by cart_count desc

limit 10;

insert into table ${APP}.ads_product_refund_topN

select

'$do_date',

sku_id,

refund_last_30d_count/payment_last_30d_count*100 refund_ratio

from ${APP}.dwt_sku_topic

order by refund_ratio desc

limit 10;

insert into table ${APP}.ads_appraise_bad_topN

select

'$do_date' dt,

sku_id,

appraise_bad_count/(appraise_good_count+appraise_mid_count+appraise_bad_count+appraise_default_count) appraise_bad_ratio

from

${APP}.dws_sku_action_daycount

where

dt='$do_date'

order by appraise_bad_ratio desc

limit 10;

insert into table ${APP}.ads_order_daycount

select

'$do_date',

sum(order_count),

sum(order_amount),

sum(if(order_count>0,1,0))

from ${APP}.dws_user_action_daycount

where dt='$do_date';

insert into table ${APP}.ads_payment_daycount

select

tmp_payment.dt,

tmp_payment.payment_count,

tmp_payment.payment_amount,

tmp_payment.payment_user_count,

tmp_skucount.payment_sku_count,

tmp_time.payment_avg_time

from

(

select

'$do_date' dt,

sum(payment_count) payment_count,

sum(payment_amount) payment_amount,

sum(if(payment_count>0,1,0)) payment_user_count

from ${APP}.dws_user_action_daycount

where dt='$do_date'

)tmp_payment

join

(

select

'$do_date' dt,

sum(if(payment_count>0,1,0)) payment_sku_count

from ${APP}.dws_sku_action_daycount

where dt='$do_date'

)tmp_skucount on tmp_payment.dt=tmp_skucount.dt

join

(

select

'$do_date' dt,

sum(unix_timestamp(payment_time)-unix_timestamp(create_time))/count(*)/60 payment_avg_time

from ${APP}.dwd_fact_order_info

where dt='$do_date'

and payment_time is not null

)tmp_time on tmp_payment.dt=tmp_time.dt;

with

tmp_order as

(

select

user_id,

order_stats_struct.sku_id sku_id,

order_stats_struct.order_count order_count

from ${APP}.dws_user_action_daycount lateral view explode(order_detail_stats) tmp as order_stats_struct

where date_format(dt,'yyyy-MM')=date_format('$do_date','yyyy-MM')

),

tmp_sku as

(

select

id,

tm_id,

category1_id,

category1_name

from ${APP}.dwd_dim_sku_info

where dt='$do_date'

)

insert into table ${APP}.ads_sale_tm_category1_stat_mn

select

tm_id,

category1_id,

category1_name,

sum(if(order_count>=1,1,0)) buycount,

sum(if(order_count>=2,1,0)) buyTwiceLast,

sum(if(order_count>=2,1,0))/sum(if(order_count>=1,1,0)) buyTwiceLastRatio,

sum(if(order_count>=3,1,0)) buy3timeLast,

sum(if(order_count>=3,1,0))/sum(if(order_count>=1,1,0)) buy3timeLastRatio,

date_format('$do_date' ,'yyyy-MM') stat_mn,

'$do_date' stat_date

from

(

select

tmp_order.user_id,

tmp_sku.category1_id,

tmp_sku.category1_name,

tmp_sku.tm_id,

sum(order_count) order_count

from tmp_order

join tmp_sku

on tmp_order.sku_id=tmp_sku.id

group by tmp_order.user_id,tmp_sku.category1_id,tmp_sku.category1_name,tmp_sku.tm_id

)tmp

group by tm_id, category1_id, category1_name;

insert into table ${APP}.ads_area_topic

select

'$do_date',

id,

province_name,

area_code,

iso_code,

region_id,

region_name,

login_day_count,

order_day_count,

order_day_amount,

payment_day_count,

payment_day_amount

from ${APP}.dwt_area_topic;

"

$hive -e "$sql"
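
The `ads_continuity_uv_count` statement in the script above looks for devices that were active on at least 3 consecutive days within the last 7. It does so by subtracting each row's rank from its date: consecutive dates collapse to the same `date_dif`, so a `(mid_id, date_dif)` group with 3 or more rows indicates a streak. The following is a minimal shell sketch of that idea only, not part of the script. The function names `date_dif` and `has_3day_streak` and the sample dates are hypothetical, and the sketch assumes GNU `date` (for the `-d` option).

```shell
#!/bin/bash
# Mirrors date_sub(dt, rank): shift a date back by its rank within one device.
# Assumes GNU date; TZ=UTC avoids daylight-saving surprises.
date_dif() {
    TZ=UTC date -d "$1 -$2 days" +%F
}

# Arguments: the sorted, distinct active dates of a single device.
# Prints "yes" if they contain a run of at least 3 consecutive days, else "no".
has_3day_streak() {
    local rank=0 dt
    for dt in "$@"; do
        rank=$((rank + 1))
        date_dif "$dt" "$rank"
    done | sort | uniq -c | awk '$1 >= 3 { found = 1 } END { print (found ? "yes" : "no") }'
}

# Hypothetical sample: 06-10, 06-11 and 06-12 all map to 2020-06-08.
has_3day_streak 2020-06-08 2020-06-10 2020-06-11 2020-06-12 2020-06-14   # prints yes
```

Because `dws_uv_detail_daycount` is expected to hold one row per device per day, `rank()` behaves like a plain row number here. If duplicate days were possible, the ranks would tie and the trick would need deduplication first.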

# Grant execute permission:

chmod 777 dwt_to_ads.sh

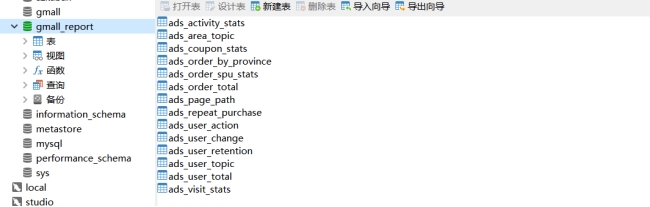

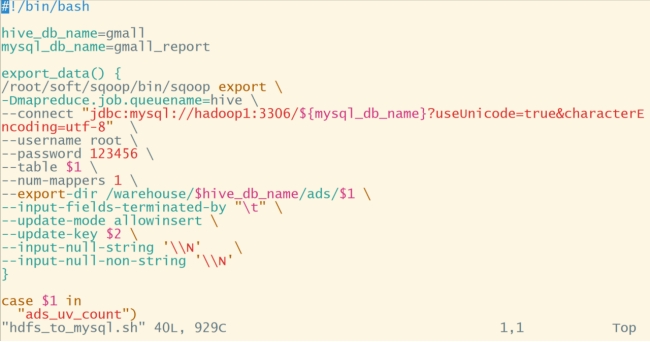

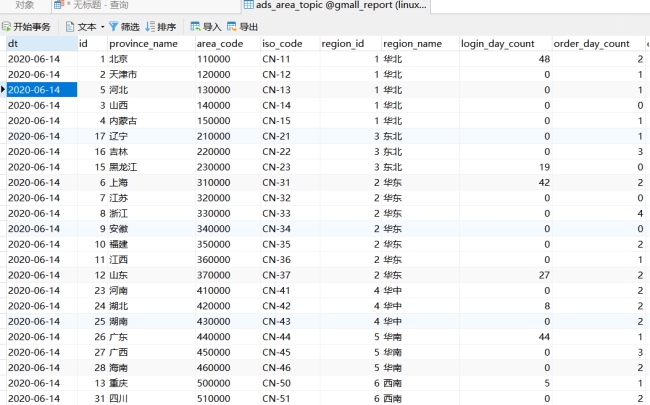

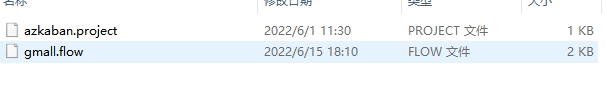
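
Before running the script, it can help to know which three-week window the `ads_continuity_wk_count` statement will write for a given date. The sketch below reproduces the `next_day('$do_date','MO')` arithmetic in shell: Hive's `next_day` returns the first Monday strictly after the given date, and the window runs from 21 days before that Monday to 1 day before it. The helper names `shift_days` and `week_range` are hypothetical, and GNU `date` is assumed.

```shell
#!/bin/bash
# Shift a yyyy-MM-dd date by a signed number of days (assumes GNU date).
shift_days() {
    TZ=UTC date -d "$1 $2 days" +%F
}

# Print the three-week window used by the query for the date in $1:
# next Monday minus 21 days, an underscore, then next Monday minus 1 day.
week_range() {
    local dow next_monday
    dow=$(TZ=UTC date -d "$1" +%u)                  # 1 = Monday ... 7 = Sunday
    next_monday=$(shift_days "$1" "+$((8 - dow))")  # first Monday strictly after $1
    echo "$(shift_days "$next_monday" -21)_$(shift_days "$next_monday" -1)"
}

week_range 2020-06-14   # prints 2020-05-25_2020-06-14
```

When the input date is itself a Monday, the window ends on the following Sunday, which matches how `next_day` skips to the next week's Monday.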

# Run the script to load the data: